In the fast-moving world of artificial intelligence and machine learning (AI/ML), everything seems to revolve around data. Entire careers are built around it. Data engineers, data analysts and data scientists are just a few of the roles that gather, synthesize, process, analyze and use data to help AI/ML solve real-world problems. As the volume of data continues to grow exponentially, managing that data becomes both critical and a major challenge.

Challenges in data management

Data management brings many challenges in the AI/ML space, such as:

- Governance and compliance: Data governance often must meet the requirements of state or federal compliance policies for traceability, privacy and audit

- Data lineage: Tracking every variation of, or modification to, data is critical to reproducing complex AI/ML workflows and outputs

- Data management: Managing the lifecycle of data, how users interact with it and its storage requirements, along with choosing the right tooling, helps control costs and maintain productivity

- Knowledge and expertise: There are hundreds of AI/ML tools available to data scientists and engineers, and new tools are added to the AI/ML industry every day. Introducing tools that have a familiar workflow, and that are easy to understand and consume, allows engineers to focus on their business goals rather than on learning new tools

The combination of Red Hat OpenShift AI as an AI/ML platform and data version control from lakeFS helps alleviate these challenges.

Data versioning with lakeFS

The most prevalent way developers manage source code today is through Git and tools built around it, such as GitHub and GitLab. There are many other tools available, but most have a similar workflow. Git, however, is not designed for large binary objects, such as large data files, tarballs, container images or AI/ML models. For those file types, object storage is commonly used, often through an object storage solution that offers an Amazon S3-compatible interface. OpenShift AI has built-in support for interfacing with S3-accessible object storage.

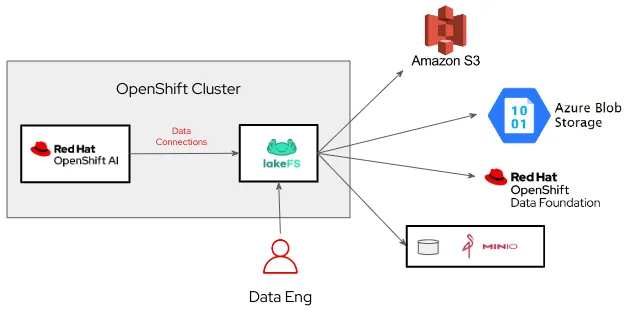

Imagine being able to manage AI/ML data, models, pipeline artifacts and other large object files in a Git-like manner, either through a web console or an API. lakeFS serves as an S3 gateway to many different object storage solutions, including Amazon S3, Azure Blob Storage, Google Cloud Storage, Red Hat OpenShift Data Foundation, MinIO and many more. Even better, it can be added between the OpenShift AI cluster and an existing object storage solution with very few changes to the environment. lakeFS can run locally in the OpenShift cluster, or OpenShift AI can connect to lakeFS running in another on-premises environment, public cloud or private cloud.
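To make the S3 gateway idea concrete, here is a minimal Python sketch of how an S3 client might address an object through lakeFS. It relies on the lakeFS gateway convention that the repository name is used as the S3 bucket and the branch (or other ref) is the first segment of the object key. The repository name, file path, endpoint URL and credentials are hypothetical placeholders, and the `download` helper assumes the `boto3` package is installed; treat this as an illustration rather than the configuration used in the validated demo.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LakeFSObjectRef:
    """One object in lakeFS: a repository, a ref (branch, tag or commit) and a path."""

    repository: str
    ref: str
    path: str

    def to_s3(self) -> Tuple[str, str]:
        """Return the (Bucket, Key) pair an S3 client sends to the lakeFS gateway."""
        # The repository plays the role of the bucket; the ref prefixes the key.
        return self.repository, f"{self.ref}/{self.path.lstrip('/')}"


def download(obj: LakeFSObjectRef, endpoint_url: str,
             access_key: str, secret_key: str, dest: str) -> None:
    """Download one object through the gateway (assumes boto3 is installed)."""
    import boto3  # imported lazily so the addressing helper has no dependencies

    s3 = boto3.client(
        "s3",
        endpoint_url=endpoint_url,          # the lakeFS endpoint, not the backing store
        aws_access_key_id=access_key,       # lakeFS access key (placeholder)
        aws_secret_access_key=secret_key,   # lakeFS secret key (placeholder)
    )
    bucket, key = obj.to_s3()
    s3.download_file(bucket, key, dest)


# Hypothetical repository and file names, for illustration only.
train = LakeFSObjectRef("fraud-detection", "main", "data/card_transdata.csv")
print(train.to_s3())
```

Because only the endpoint, the credentials and the bucket/key layout change, existing S3 client code can usually stay as it is, which is why inserting lakeFS in front of an existing object store requires so few changes.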

With lakeFS, data engineers can now create new repositories for their AI/ML data and models, create branches, make changes, merge changes and track the entire lineage of data. It offers a single, familiar interface regardless of where the data or models are stored.
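The branch, commit and merge workflow described above can be illustrated with a small in-memory toy model. This is a conceptual sketch only: `ToyRepo` and its methods are hypothetical names invented for this example, not the lakeFS API, and the merge is deliberately simplified (changes from the source branch win and no conflicts are detected). It shows why branching is cheap (a branch is just a pointer to a snapshot) and how lineage falls out of the commit history.

```python
from typing import Dict, List, Optional


class ToyRepo:
    """In-memory illustration of Git-like data versioning (not the lakeFS API)."""

    def __init__(self) -> None:
        # Each commit is a snapshot mapping object path -> content identifier.
        self.commits: List[Dict[str, str]] = [{}]
        self.parents: List[Optional[int]] = [None]
        self.branches: Dict[str, int] = {"main": 0}

    def create_branch(self, name: str, source: str) -> None:
        # Branching copies a pointer to a commit; no data is duplicated.
        self.branches[name] = self.branches[source]

    def commit(self, branch: str, changes: Dict[str, str]) -> int:
        head = self.branches[branch]
        self.commits.append({**self.commits[head], **changes})
        self.parents.append(head)
        self.branches[branch] = len(self.commits) - 1
        return self.branches[branch]

    def merge(self, source: str, dest: str) -> int:
        # Simplified merge: overlay the source snapshot onto the destination.
        return self.commit(dest, self.commits[self.branches[source]])

    def read(self, branch: str, path: str) -> Optional[str]:
        return self.commits[self.branches[branch]].get(path)

    def lineage(self, branch: str) -> List[int]:
        # Walk parent pointers from the branch head back to the first commit.
        history: List[int] = []
        cid: Optional[int] = self.branches[branch]
        while cid is not None:
            history.append(cid)
            cid = self.parents[cid]
        return history


repo = ToyRepo()
repo.commit("main", {"data/train.csv": "v1"})
repo.create_branch("experiment", "main")
repo.commit("experiment", {"data/train.csv": "v2", "models/fraud.onnx": "m1"})
before_merge = repo.read("main", "data/train.csv")  # main is isolated from the branch
repo.merge("experiment", "main")
print(before_merge, repo.read("main", "data/train.csv"), repo.lineage("main"))
```

In this toy, changes on `experiment` stay invisible to `main` until the merge, and `lineage` returns the chain of commits that produced the current state of a branch.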

Try out lakeFS with OpenShift AI

Red Hat has worked closely with the lakeFS team at Treeverse to validate the integration of Red Hat OpenShift AI with lakeFS. We replicated the fraud detection demo found in the OpenShift AI documentation and adapted it to insert lakeFS between OpenShift AI and a local instance of MinIO. With this validation complete, the lakeFS team has announced support for running lakeFS on an OpenShift cluster and for its integration with OpenShift AI. Be sure to check out the blog post "Accelerating AI Innovation with lakeFS and OpenShift AI" on the lakeFS site.

Follow the instructions to get your OpenShift AI environment up and running with lakeFS and MinIO, then perform the fraud detection demo with the changes outlined in those instructions. You’ll get to test pulling data from lakeFS, storing data via lakeFS, saving trained models to lakeFS and pulling models to serve from lakeFS, and even explore how OpenShift AI pipelines use lakeFS for artifact storage.

Happy data versioning!


About the author

Sean has been (back) at Red Hat since 2020 working with strategic Red Hat ecosystem partners to co-create integrated product solutions and get them to market.
