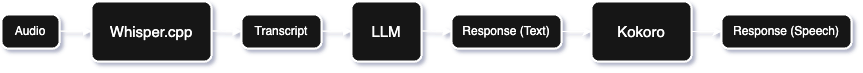

Converse with large language models using speech. DEMO

- Open: Powered by state-of-the-art open-source speech processing models.

- Efficient: Light enough to run on consumer hardware, with low latency.

- Self-hosted: Entire pipeline runs offline, limited only by compute power.

- Modular: Switching LLM providers is as simple as changing an environment variable.

- For text generation, you can either self-host an LLM using Ollama, or opt for a third-party provider. This can be configured using a `.env` file in the project root.

  - If you're using Ollama, add the `OLLAMA_MODEL` variable to the `.env` file to specify the model you'd like to use. (Example: `OLLAMA_MODEL=deepseek-r1:7b`)

  - Among the third-party providers, Sage supports the following out of the box:

    - Deepseek

    - OpenAI

    - Anthropic

    - Together.ai

  - To use a provider, add a `<PROVIDER>_API_KEY` variable to the `.env` file. (Example: `OPENAI_API_KEY=xxxxxxxxxxxxxxxxxxxxxxx`)

  - To choose which model should be used for a given provider, use the `<PROVIDER>_MODEL` variable. (Example: `DEEPSEEK_MODEL=deepseek-chat`)
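As an illustration of the variables described above, a `.env` file might look like the sketch below. The values are the examples from this README. The `DEEPSEEK_API_KEY` name is an assumption derived from the `<PROVIDER>_API_KEY` pattern, and the key is a placeholder. How Sage behaves when both Ollama and a provider are configured is not stated here, so the sketch keeps only one option active.

```sh
# .env (project root)

# Option 1: self-hosted model served by Ollama
OLLAMA_MODEL=deepseek-r1:7b

# Option 2: a third-party provider (uncomment and fill in your own key)
# DEEPSEEK_API_KEY=xxxxxxxxxxxxxxxxxxxxxxx
# DEEPSEEK_MODEL=deepseek-chat
```

To switch providers, comment out the active option and uncomment the other.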

- Next, you have two choices: run Sage as a Docker container (the easy way) or natively (the hard way). Note that running it with Docker may carry a performance penalty (inference with Whisper is 4-5x slower compared to native).

  - With Docker: Install Docker and start the daemon. Download the following files and place them inside a `models` directory at the project root. Run `bun docker-build` to build the image and then `bun docker-run` to spin up a container. The UI is exposed at `http://localhost:3000`.

  - Without Docker: Install Bun, Rust, OpenSSL, LLVM, Clang, and CMake. Make sure all of these are accessible via `$PATH`. Then, run `setup-unix.sh` or `setup-win.bat` depending on your platform. This will download the required model weights and compile the binaries needed for Sage. Once finished, start the project with `bun start`. The first run on macOS is slow (~20 minutes on M1 Pro), since the ANE service compiles the Whisper CoreML model to a device-specific format. Subsequent runs are faster.
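For the native route on a Unix-like system, a quick way to confirm the prerequisites are reachable via `$PATH` is sketched below. This script is not part of Sage. The binary names are assumptions (for example, `cargo` standing in for Rust and `llvm-config` for LLVM) and may differ on your platform.

```sh
#!/bin/sh
# Print each named command that cannot be found on $PATH, one per line.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Assumed binary names for the listed prerequisites; adjust as needed.
# LLVM ships many binaries, so llvm-config stands in for it here.
missing=$(missing_tools bun cargo openssl llvm-config clang cmake)

if [ -n "$missing" ]; then
  echo "Not found on PATH:"
  echo "$missing"
else
  echo "All prerequisites found. Next: run setup-unix.sh, then bun start."
fi
```

If anything is reported missing, install it or fix your `$PATH` before running the setup script.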


- Make it easier to run (Dockerize?)

- CUDA support

- Allow custom Ollama endpoint

- Multilingual support

- Allow Whisper configuration

- Allow customization of system prompt

- Optimize the pipeline

- Release as a library?