Using Apache Hive with High Performance

- 1. Page1 © Hortonworks Inc. 2014 High Performance Hive Raj Bains, Hive Product Manager Fall 2015

- 2. Page2 © Hortonworks Inc. 2014 Using Hive • Hive Stack • Getting Data into Hive • Data Storage and Layout • Execution Engines • Execution and Queues • Common Query Issues • Appendix • Hive Bucketing • Hive Explain Query Plan

- 3. Page3 © Hortonworks Inc. 2014 MetaStore DB HDFS HiveServer2HiveCLI WebHCatWebHDFS Execution Engines Tez / MapReduce HTTP Client HTTP BI ClientBeeline JDBC / ODBC Oozie Metastore

- 4. Page4 © Hortonworks Inc. 2014 Getting Data into Hive Common Methods

- 5. Page5 © Hortonworks Inc. 2014 cars.csv Hive HDFScars.csv 1. Land data into HDFS cars orc 2. Create external table Ext_table 3. Create ORC Table Hive_table 4. Do Insert.. Select.. into ORC Table Hive Ingestion: Using External Table

- 6. Page6 © Hortonworks Inc. 2014 Commands [rbains@cn105-10 ~]$ head cars.csv Name,Miles_per_Gallon,Cylinders,Displacement,Horsepower,Weigh t_in_lbs,Acceleration,Year,Origin "chevrolet chevelle malibu",18,8,307,130,3504,12,1970-01-01,A "buick skylark 320",15,8,350,165,3693,11.5,1970-01-01,A "plymouth satellite",18,8,318,150,3436,11,1970-01-01,A [rbains@cn105-10 ~]$ hdfs dfs -copyFromLocal cars.csv /user/rbains/visdata [rbains@cn105-10 ~]$ hdfs dfs -ls /user/rbains/visdata Found 1 items -rwxrwxrwx 3 rbains hdfs 22100 2015-08-12 16:16 /user/rbains/visdata/cars.csv CREATE EXTERNAL TABLE IF NOT EXISTS cars( Name STRING, Miles_per_Gallon INT, Cylinders INT, Displacement INT, Horsepower INT, Weight_in_lbs INT, Acceleration DECIMAL, Year DATE, Origin CHAR(1)) COMMENT 'Data about cars from a public database' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE location '/user/rbains/visdata'; CREATE TABLE IF NOT EXISTS mycars( Name STRING, Miles_per_Gallon INT, Cylinders INT, Displacement INT, Horsepower INT, Weight_in_lbs INT, Acceleration DECIMAL, Year DATE, Origin CHAR(1)) COMMENT 'Data about cars from a public database' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC; INSERT OVERWRITE TABLE mycars SELECT * FROM cars;

- 7. Page7 © Hortonworks Inc. 2014 Source Database HDFSfile file file file map1 map2 map3 map4 1. Specify connection information 2. Specify source data and parallelism (=4 here) 3. Specify destination – HDFS or Hive (prefer HDFS) SQOOP Hive Ingestion: Sqoop

- 8. Page8 © Hortonworks Inc. 2014 Sqoop Examples Connection sqoop import --connect jdbc:mysql://db.foo.com/bar --table EMPLOYEES --columns "employee_id,first_name,last_name,job_title" Getting Incremental Data sqoop import --connect jdbc:mysql://db.foo.com/bar --table EMPLOYEES --where "start_date > '2010-01-01'" sqoop import --connect jdbc:mysql://db.foo.com/bar --table EMPLOYEES --where "id > 100000" --target-dir /incremental_dataset --append Specify Parallelism – Split-by and num-mappers sqoop import --connect jdbc:mysql://db.foo.com/bar --table EMPLOYEES --num-mappers 8 sqoop import --connect jdbc:mysql://db.foo.com/bar --table EMPLOYEES --split-by dept_id Specify SQL Query sqoop import --query 'SELECT a.*, b.* FROM a JOIN b on (a.id == b.id) WHERE $CONDITIONS' --split-by a.id --target-dir /user/foo/joinresults Specify Destination --target-dir /user/foo/joinresults --hive-import Direct Hive import only supports Text, prefer HDFS for other formats

- 9. Page9 © Hortonworks Inc. 2014 Merging Data without SQL Merge - Ingest Base Table as ORC Ingest Base Table sqoop import --connect jdbc:teradata://{host name}/Database=retail --connection-manager org.apache.sqoop.teradata.TeradataConnManager --username dbc --password dbc --table SOURCE_TBL --target-dir /user/hive/base_table -m 1 CREATE TABLE base_table ( id STRING, field1 STRING, modified_date DATE) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC; 1 Ingest Incremental Table sqoop import --connect jdbc:teradata://{host name}/Database=retail --connection-manager org.apache.sqoop.teradata.TeradataConnManager --username dbc --password dbc --table SOURCE_TBL --target-dir /user/hive/incremental_table -m 1 --check-column modified_date --incremental lastmodified --last-value {last_import_date} Ingest CREATE EXTERNAL TABLE incremental_table ( id STRING, field1 STRING, modified_date DATE) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE location '/user/hive/incremental_table'; Incremental External Table

- 10. Page10 © Hortonworks Inc. 2014 Merging Data without SQL Merge – Reconcile/Merge CREATE A MERGE VIEW CREATE VIEW reconcile_view AS SELECT t1.* FROM (SELECT * FROM base_table UNION ALL SELECT * from incremental_table) t1 JOIN (SELECT id, max(modified_date) max_modified FROM (SELECT * FROM base_table UNION ALL SELECT * from incremental_table) GROUP BY id) t2 ON t1.id = t2.id AND t1.modified_date = t2.max_modified; 2 Reconcile / Merge

- 11. Page11 © Hortonworks Inc. 2014 Merging Data without SQL Merge – Compact/ Delete CREATE A MERGE VIEW DROP TABLE reporting_table; CREATE TABLE reporting_table AS SELECT * FROM reconcile_view; 3 Compact 4 Purge hadoop fs –rm –r /user/hive/incremental_table/* DROP TABLE base_table; CREATE TABLE base_table ( id STRING, field1 STRING, modified_date DATE) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC; INSERT OVERWRITE TABLE base_tabe SELECT * FROM reporting_table;

- 12. Page12 © Hortonworks Inc. 2014 Hive Streaming – Tool Based Ingest Storm Bolt Flume

- 13. Page13 © Hortonworks Inc. 2014 Storing Data In Hive Correct Storage is the key to performance

- 14. Page14 © Hortonworks Inc. 2014 Using Partitions- Why? 1. Primary • Atomicity of Append • Reducing search space for Query 2. Secondary • Reduce space for compactions • Reduce space for updates (partition replacement) Note: Schema evolution is supported on partitions without changing old data, However you cannot modify old partitions if the schema changes Date = 2015-08-15

- 15. Page15 © Hortonworks Inc. 2014 Using Partitions – Number of Partitions for a Query • Time from ingestion to Query • Multiple writes to same partitions causes locking? • Query pattern? Filter to read fewest partitions • Metadata including column stats per partition • MetastoreDB performance • Memory size for Metastore, HiveServer2 (use the right size here, default is 1GB) • Memory size during Execution small large > 10K partitions probably requires a powerful ORACLE RAC system and enough memory on every node Query Pattern – A query reading 10K partitions will take the cluster down • Pick a column with low-medium NDV • Avoid Partitions < 1GB, bigger is better • Nesting can cause too many partitions • Scale: For dates, use partitions that increase scale as data gets older, e.g. 15 minutes, hours, months, year … Anecdotes Advice Factors

- 16. Page16 © Hortonworks Inc. 2014 Bucketing • Better at high NDV • Hash partitioned on a primary number is good • Very difficult to get this right • Use only for very large tables • Get the distribution of data correct • Use the right bucket number • Note: Only use to get joins on bucket key – need to get a few sizes right • Conclusion – Avoid this or have a data scientist figure it out • Ask for Ofer at HWX

- 17. Page17 © Hortonworks Inc. 2014 ORC – Advanced Columnar format Stripes: Indexes and Stats every 10K rows ORC provides three level of indexes within each file: • file level - statistics about the values in each column across the entire file • stripe level - statistics about the values in each column for each stripe • row level - statistics about the values in each column for each set of 10,000 rows within a stripe Read File? File and stripe level column statistics are in the file footer Row level indexes include both the column statistics for each row group and the position for seeking to the start of the row group. Column statistics - count of values, null values present, min and max, sum. As of Hive 1.2, the indexes can include bloom filters.

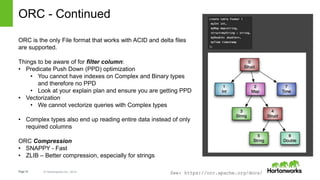

- 18. Page18 © Hortonworks Inc. 2014 ORC - Continued ORC is the only File format that works with ACID and delta files are supported. Things to be aware of for filter column: • Predicate Push Down (PPD) optimization • You cannot have indexes on Complex and Binary types and therefore no PPD • Look at your explain plan and ensure you are getting PPD • Vectorization • We cannot vectorize queries with Complex types • Complex types also end up reading entire data instead of only required columns ORC Compression • SNAPPY - Fast • ZLIB – Better compression, especially for strings See: https://orc.apache.org/docs/

- 19. Page19 © Hortonworks Inc. 2014 Storage – Layout in ETL for your queries SRC DST ETL Query 1. cluster by 2. sort by High NDV Low NDV select .. from .. where id = 5 select .. from .. group by gender Id = 1 Id = 1 Id = 18 Id = 18 Id = 2 Id = 2 Id = 19 Id = 19 5K F 5K M 5K F 5K M

- 20. Page20 © Hortonworks Inc. 2014 Execution Engine For ETL and Interactive workloads

- 21. Page21 © Hortonworks Inc. 2014 Use Tez Execution Engine HDP 2.2 onward Tez is very stable and was made the default in 2.2.4 HDP 2.2.4 onward Tez View is GA and allows you to debug query execution without looking at logs

- 22. Page22 © Hortonworks Inc. 2014 Execution – Containers and Queues HDFS YARN Tez Session AM C C C Tez Session AM C C C C T T i i Queues Containers Running Tasks and Idle containers T Session AMs HiveServer2 HiveServer2 CLI Tez Session AM T T T T T T Holding on to sessions and containers Round robin, DoAs http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.0/bk_performance_tuning/content/hive_perf_best_pract_better_wkld_mgmt_thru_queues.html

- 23. Page23 © Hortonworks Inc. 2014 Configuring Memory Correctly YARN • System < 16GB RAM, leave 25% for System and rest for YARN containers • System > 16GB RAM, leave 12.5% for System and rest for YARN containers TEZ • Tez Container Size is a multiple of YARN container size. Larger causes wastage & larger mapjoins • For HDP 2.2, Xmx = Xms = 80% of container size • For HDP 2.3, Xmx, Xms not needed • Set TEZ_CONTAINER_MAX_JAVA_HEAP_FRACTION = 0.8 HIVE • join.noconditionaltask.size determines size of mapjoins, recommended to be 33% of Tez container size • reducers.bytes.per.reducer is data per reducer and can determine customer success HiveServer2 Heap 1GB, more for larger #partitions HiveMetastore Heap 1GB, more for larger #partitions

- 24. Page24 © Hortonworks Inc. 2014 Query Compilation and Execution

- 25. Page25 © Hortonworks Inc. 2014 Cost Based Optimizer and Statistics Table level statistics Internal Table - SET hive.stats.autogather=true; External Table - ANALYZE TABLE <table_name> COMPUTE STATISTICS; Prefer internal tables Column level statistics – NDV, Min, Max Gathering not automated ANALYZE TABLE <table_name> COMPUTE STATISTICS for COLUMNS; CBO • Use CBO • SET hive.cbo.enable=true; • SET hive.stats.fetch.column.stats=true; • SET hive.stats.fetch.partition.stats=true; • Helps especially with Joins

- 26. Page26 © Hortonworks Inc. 2014 Query Optimization - Parallelism Number of Mappers • tez.am.grouping.split-waves=1.7 • For a query the number of mappers is 1.7x available containers • The first wave is heavier and second smaller wave of 0.7x usually covers straggler latency • You can change this number (per submitting node) if you believe you’re not getting correct parallelism Number of Reducers • hive.exec.reducers.bytes.per.reducer • This number will decide how much data each reducer processes and therefore how many reducers are needed • This number may be changed if your query is not getting correct parallelism for reducers

- 27. Page27 © Hortonworks Inc. 2014 Query Optimization – Joins • Getting MapJoin instead of Shuffle Join • Map Join will broadcast small table to multiple nodes • Large table will be streamed in parallel • You’ll see a broadcast edge between two table reads • Shuffle Join will shuffle data • Should only happen between two large tables • join.noconditionaltask.size will determine maximum cumulative memory to be used for MapJoins • It should be approximately a third of Tez container size • If you have a lot of possible MapJoins converting into Shuffle joins, increase this number and Tez container size • Getting Incorrect Join Order • Ensure that you’re using CBO and have column stats • If you cannot get the right order from optimizer, write a CTE and factor out the join

- 28. Page28 © Hortonworks Inc. 2014 Getting Unusually High GC times • Sometimes GC time will dominate query time • Use hive.tez.exec.print.summary to see the GC time • Find what is happening in the Vertex that has high GC, some common issues are: • MapJoin – tune down the mapjoins • Insert to ORC – If there are very wide rows with many columns, reduce hive.exec.orc.default.buffer.size or increase the Tez container size • Insert into Partitioned Table – If large number of tasks are writing concurrently to partitions, this can cause memory pressure, enable hive.optimize.sort.dynamic.partition

- 29. Page29 © Hortonworks Inc. 2014 Summary and Roadmap

- 30. Page30 © Hortonworks Inc. 2014 Summary HIVE Getting Data into Hive Storage • Partitioning • Bucketing • ORC File Format • Schema Design for read Execution • Tez • Using YARN Queues • Resource reuse Memory • YARN • Tez • Hive • HiveServer2 • Metastore Query Compilation • CBO and Stats • Parallelism • Debugging common issues

- 31. Page31 © Hortonworks Inc. 2014 Questions ?

- 32. Page32 © Hortonworks Inc. 2014 Appendix – Deep Dives .

- 33. Page33 © Hortonworks Inc. 2014 Hive Bucketing Challenges and deep dive

- 34. Page34 © Hortonworks Inc. 2014 Recap: Storage – layout implications for the queries SRC DST ETL Query 1. cluster by 2. sort by High NDV Low NDV select .. from .. where id = 5 select .. from .. group by gender Id = 1 Id = 1 Id = 18 Id = 18 Id = 2 Id = 2 Id = 19 Id = 19 5K F 5K M 5K F 5K M

- 35. Page35 © Hortonworks Inc. 2014 Hive Bucketing Overview • Basics of Bucketing • Motivation for Bucketing • Challenges with Bucketing • How to choose good bucketing

- 36. Page36 © Hortonworks Inc. 2014 Basics of Bucketing • Declared in DDL • Uses the Java Hash Function to distribute rows across buckets • DataType -> GetHash( ) => Integer % nBuckets • In every Partition (directory) there is exactly one file per Bucket • The number of Buckets are identical across all partitions • There is no ‘number of Buckets’ evolution story • Changing the number of buckets requires reloading the table from scratch

- 37. Page37 © Hortonworks Inc. 2014 Motivation for Bucketing • Self joins • Self joins are very efficient • Conversion to Map Join • Large table is bucketed, small table is distributed by it’s bucket key • SMB Join • Sort Merge Bucket Join • Requires multiple tables bucketed by same key and the number of buckets in one should be a multiple of the other • Rarely used and primarily for PB to TB joins • Requires HDP 2.3 or > HDP 2.2.8 Partition 1 B1 B2 B3 Table Large B1 B2 B3 Table small small_1 small_2 small_3

- 38. Page38 © Hortonworks Inc. 2014 Motivation for Bucketing - ACID • ACID Requires delta file merges • Buckets reduce the scope of these merges making them faster Partition P1 B1 B2 B3 B4 B5 Delta 1 d_B1 d_B2 d_B3 d_B4 d_B5 Delta 2 d_B1 d_B2 d_B3 d_B4 d_B5 Merge Scope

- 39. Page39 © Hortonworks Inc. 2014 Challenges With Bucketing – Data Skew Hash function induced Skew • DataType -> GetHash( ) => Integer % nBuckets • String hashes have high collisions • The hash distribution is not uniform and usually a small subset of characters is used • For example Aa and BB hash to same location • Integer hashes are integers themselves ( 20 => 20 % nBuckets) • Often input has patterns that can lead to a bad distribution (even numbers) Input Data Skew • Input data is often skewed in favor of one or a few values • anonymous is very common in streaming data • user_id 0 is common when user_id is unavailable

- 40. Page40 © Hortonworks Inc. 2014 Challenges with Bucketing – Constant Number • There is one-to-one correspondence between • the number of buckets and the number of files in a partition • As the data size increases or the data distribution pattern changes • it is not possible to change the number of buckets • ETL Speed Concerns • Only one CPU Core writes to a single bucket • A large cluster can get significantly underutilized when the number of buckets is small • Input skew can lead of some very slow processing Partition P1 B1 B2 B3 B4 B5

- 41. Page41 © Hortonworks Inc. 2014 HORTONWORKS CONFIDENTIAL & PROPRIETARY INFORMATION Challenges with Bucketing – Legacy Concerns • Map Reduce support has caused some optimizations to not be done • Map Reduce uses CombineInputFormat implementation that causes: • Bucketed partitions cannot be appended to (without ACID), you can’t just add a file • No static bucket pruning can be done • For SMB, buckets from all partitions are combined first • Seatbelts and Roll Cages • Some customers have turned bucketing off and on causing inconsistent data • Compile time checks ensure bucketing is done correctly, query compile time suffers greatly due to this

- 42. Page42 © Hortonworks Inc. 2014 Using Buckets Effectively (Needed with ACID) • Choose a good bucketing key • Ensure that it has high NDV • Ensure that it has good distribution • Choose a good number of buckets • The number should be high enough to allow enough parallelism on write • The number should be prime (never use 31) • Try to get ORC File sizes of 1GB or more • Smaller files become a single split reducing parallelism • Use exact data types in filters • Do not use conversions when using where clauses on bucket columns • E.g. String and Varchar are hashed differently • List bucketing can help with input skew on one column and works in a very narrow case. But in that very narrow case, you can use it.

- 43. Page43 © Hortonworks Inc. 2014 Hive Explain Plan Understanding your query

- 44. Page44 © Hortonworks Inc. 2014 Query Example – TPC-DS Query 27 SELECT i_item_id, s_state, avg(ss_quantity) agg1, avg(ss_list_price) agg2, avg(ss_coupon_amt) agg3, avg(ss_sales_price) agg4 FROM store_sales, customer_demographics, date_dim, store, item WHERE store_sales.ss_sold_date_sk = date_dim.d_date_sk AND store_sales.ss_item_sk = item.i_item_sk AND store_sales.ss_store_sk = store.s_store_sk AND store_sales.ss_cdemo_sk = customer_demographics.cd_demo_sk AND customer_demographics.cd_gender = 'F’ AND customer_demographics.cd_marital_status = 'D’ AND customer_demographics.cd_education_status = 'Unknown’ AND date_dim.d_year = 1998 AND store.s_state in ('KS','AL', 'MN', 'AL', 'SC', 'VT') GROUP BY i_item_id, s_state ORDER BY i_item_id ,s_state LIMIT 100;

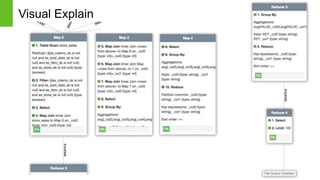

- 45. Page45 © Hortonworks Inc. 2014 Visual Explain

- 46. Page46 © Hortonworks Inc. 2014 Visual Explain

- 47. Page47 © Hortonworks Inc. 2014 Tasks show you parallelism

- 48. Page48 © Hortonworks Inc. 2014 Text Explain - Structure Stage: Stage-1 Tez Edges: Map 2 <- Map 1 (BROADCAST_EDGE), Map 5 (BROADCAST_EDGE), Map 6(BROADCAST_EDGE), Map 7 (BROADCAST_EDGE) Reducer 3 <- Map 2 (SIMPLE_EDGE) Reducer 4 <- Reducer 3 (SIMPLE_EDGE) DagName: hive_20151001122139_c07b3717-ebf5-4d13-acd8-0aa003e275ad:43 Vertices: Map 1 …

- 49. Page49 © Hortonworks Inc. 2014 Text Explain - Snippets Map 1 Map Operator Tree: TableScan alias: item filterExpr: i_item_sk is not null (type: boolean) Statistics: Num rows: 48000 Data size: 68732712 Basic stats: COMPLETE Column stats: COMPLETE Map 2 Map Operator Tree: TableScan alias: store_sales filterExpr: (((ss_cdemo_sk is not null and ss_sold_date_sk is not null) and ss_item_sk is not null) and ss_store_sk is not null) (type: boolean) Statistics: Num rows: 575995635 Data size: 50814502088 Basic stats: COMPLETE Column stats: COMPLETE Filter Operator predicate: (((ss_cdemo_sk is not null and ss_sold_date_sk is not null) and ss_item_sk is not null) and ss_store_sk is not null) (type: boolean) Statistics: Num rows: 501690006 Data size: 15422000508 Basic stats: COMPLETE Column stats: COMPLETE Select Operator expressions: ss_sold_date_sk (type: int), ss_item_sk (type: int), ss_cdemo_sk (type: int), ss_store_sk (type: int), ss_quantity (type: int), ss_list_price (type: float), ss_sales_price (type: float), ss_coupon_amt (type: float) outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7 Statistics: Num rows: 501690006 Data size: 15422000508 Basic stats: COMPLETE Column stats: COMPLETE Map Join Operator condition map: Inner Join 0 to 1 keys: 0 _col2 (type: int) 1 _col0 (type: int) outputColumnNames: _col0, _col1, _col3, _col4, _col5, _col6, _col7 input vertices: 1 Map 5 Statistics: Num rows: 31355626 Data size: 877957528 Basic stats: COMPLETE Column stats: COMPLETE

- 50. Page50 © Hortonworks Inc. 2014 Explain Challenges • Column Lineage is not very good • As you go farther from the initial table read, column names (such as _col0) make less sense • You can track them with lineage – but hard for very large queries • Relic of the old physical optimizer in Hive • In the process of being replaced by CBO which has very good information • Right now CBO runs, followed by physical optimizer • Predicate Pushdown is not shown • Filters with simple data types get pushed down • Only logs (in ATS) show whether predicate was pushed down to ORC layer

Editor's Notes

- #23: When to hold on, how, why Round Robin Do As

![Page6 © Hortonworks Inc. 2014

Commands

[rbains@cn105-10 ~]$ head cars.csv

Name,Miles_per_Gallon,Cylinders,Displacement,Horsepower,Weigh

t_in_lbs,Acceleration,Year,Origin

"chevrolet chevelle malibu",18,8,307,130,3504,12,1970-01-01,A

"buick skylark 320",15,8,350,165,3693,11.5,1970-01-01,A

"plymouth satellite",18,8,318,150,3436,11,1970-01-01,A

[rbains@cn105-10 ~]$ hdfs dfs -copyFromLocal cars.csv

/user/rbains/visdata

[rbains@cn105-10 ~]$ hdfs dfs -ls /user/rbains/visdata

Found 1 items

-rwxrwxrwx 3 rbains hdfs 22100 2015-08-12 16:16

/user/rbains/visdata/cars.csv

CREATE EXTERNAL TABLE IF NOT EXISTS cars(

Name STRING,

Miles_per_Gallon INT,

Cylinders INT,

Displacement INT,

Horsepower INT,

Weight_in_lbs INT,

Acceleration DECIMAL,

Year DATE,

Origin CHAR(1))

COMMENT 'Data about cars from a public database'

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

STORED AS TEXTFILE

location '/user/rbains/visdata';

CREATE TABLE IF NOT EXISTS mycars(

Name STRING,

Miles_per_Gallon INT,

Cylinders INT,

Displacement INT,

Horsepower INT,

Weight_in_lbs INT,

Acceleration DECIMAL,

Year DATE,

Origin CHAR(1))

COMMENT 'Data about cars from a public database'

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

STORED AS ORC;

INSERT OVERWRITE TABLE mycars SELECT * FROM cars;](https://image.slidesharecdn.com/usinghive-151001173243-lva1-app6892/85/Using-Apache-Hive-with-High-Performance-6-320.jpg)