AI in Data Integration: Definition, Tools and Future

Tahneet Kanwal

Posted On: June 12, 2025

Data integration is the process of collecting data from different sources and structuring it into a unified format for analysis and decision-making. Using AI in data integration enhances this process by automating tasks such as data extraction, transformation, and loading across various systems.

Overview

Artificial intelligence in data integration is the use of AI/ML techniques to automate the extraction, transformation, and loading of data across diverse systems.

How Does AI Enhance Data Integration?

Here are the steps involved in the data integration process where AI plays a key role:

- Field Mapping and Schema Matching: It analyzes field names, data types, and context to align source and target schemas automatically.

- Data Quality & Anomaly Detection: It detects and resolves duplicates, missing values, and outliers by learning normal patterns and flagging unusual entries.

- Entity Resolution: It links records referring to the same real-world entity, even with inconsistencies in naming or formatting.

- Workflow Optimization: It recommends the best data flow sequence and adjusts pipelines in real time to avoid delays or failures.

- Metadata Management and Governance: It generates metadata, identifies sensitive data, and enforces compliance policies.
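
The field-mapping and schema-matching step listed above can be approximated, before any machine learning is involved, by scoring name similarity between type-compatible fields. The sketch below is a minimal, hypothetical illustration using Python's standard-library `difflib`; the field names, the `match_schemas` helper, and the 0.6 threshold are assumptions, not how any particular tool works. AI-driven tools layer learned models, sample values, and usage history on top of this kind of baseline.

```python
from difflib import SequenceMatcher
from typing import Dict, Optional


def normalize(name: str) -> str:
    # Lowercase and drop separators so "custEmail" and "cust_email" compare equal
    return "".join(ch for ch in name.lower() if ch.isalnum())


def match_schemas(
    source: Dict[str, str], target: Dict[str, str], threshold: float = 0.6
) -> Dict[str, Optional[str]]:
    """Map each source field to the most similar target field with the same type.

    Hypothetical helper: fields whose best score is below `threshold` map to None,
    so a person reviews them instead of the pipeline guessing silently.
    """
    mapping: Dict[str, Optional[str]] = {}
    for s_name, s_type in source.items():
        best, best_score = None, 0.0
        for t_name, t_type in target.items():
            if s_type != t_type:
                continue  # only consider type-compatible fields
            score = SequenceMatcher(None, normalize(s_name), normalize(t_name)).ratio()
            if score > best_score:
                best, best_score = t_name, score
        mapping[s_name] = best if best_score >= threshold else None
    return mapping


source = {"cust_id": "int", "custEmail": "str", "signup_dt": "date", "notes": "str"}
target = {"customer_id": "int", "customer_email": "str", "signup_date": "date", "region": "str"}
print(match_schemas(source, target))
# {'cust_id': 'customer_id', 'custEmail': 'customer_email', 'signup_dt': 'signup_date', 'notes': None}
```

Returning `None` for weak matches is a deliberate choice: a wrong mapping that passes silently is usually more costly than a field flagged for review.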

AI Tools/Platforms for Data Integration

- Informatica CLAIRE

- IBM Watson Knowledge Catalog

- Talend Data Fabric

- SnapLogic Iris

What Is AI in Data Integration?

AI in data integration is the use of artificial intelligence and machine learning techniques to enhance the extraction, transformation, and loading of data across different systems, including databases, APIs, cloud platforms, and file repositories.

This approach focuses on augmenting or automating the manual steps of data integration with AI-driven tools, while also handling complex or unstructured data formats.
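
To make the three stages concrete, here is a minimal, plain (non-AI) extract-transform-load sketch in Python using only the standard library. The CSV data, table name, and cleaning rules are hypothetical; the hand-written rules in `transform` are the part that AI-driven mapping and cleaning aim to learn or automate.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with messy values
RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,5.00,DE
2,5.00,DE
3,,fr
"""


def extract(text):
    # Extract: read rows from the source (an in-memory CSV string here)
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    # Transform: trim whitespace, cast types, upper-case country codes,
    # skip rows with no amount, and drop exact duplicates
    seen, clean = set(), []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        record = (int(row["order_id"]), float(amount), row["country"].strip().upper())
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean


def load(records, conn):
    # Load: write the cleaned records into the target table
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 19.99, 'US'), (2, 5.0, 'DE')]
```

Every rule here was written by hand for one specific source; each new source would need its own rules, which is the manual effort the rest of this article describes AI reducing.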

Why Is AI Needed for Data Integration?

Here are some key challenges in traditional data integration that highlight the need for AI to improve accuracy, scalability, and efficiency:

- Data Exists in Multiple Formats: Organizations work with data from relational databases, NoSQL systems, CSV files, APIs, and real-time feeds. These sources differ in structure and standards, which leads to major compatibility issues.

- Field Names and Data Types Often Mismatch: Databases may use different names and formats for the same information. Aligning these fields requires extra effort and increases the risk of mistakes.

- Data Remains Trapped in Silos: Departments manage their own databases with little coordination. This isolation makes it challenging to build a complete and unified view of the business.

- Manual Field Mapping Creates Delays: Teams need to map each field manually and write scripts for data conversion. This slows down progress and makes the integration process hard to maintain.

- Data Quality Issues Appear After Integration: Once data is integrated, issues like duplicates, missing entries, and outdated values often surface. Rule-based cleanup tools may fail to detect less obvious issues.

- Traditional Pipelines Fail to Scale: Older batch processes often struggle with high data volume and speed. These systems were not designed to support real-time or near-real-time data demands.

- Lack of Built-In Security and Governance: Many traditional tools offer limited access control and tracking. This makes it harder to manage sensitive data and comply with privacy regulations.
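
The data-quality point above, that rule-based cleanup misses less obvious issues, can be illustrated by learning a baseline from historical values instead of hard-coding limits. The sketch below uses the modified z-score (median and median absolute deviation, with the commonly used 3.5 cutoff), a robust statistical technique rather than a trained ML model; the order counts and helper names are hypothetical.

```python
import statistics


def fit_baseline(history):
    """Learn what "normal" looks like from past values: their median and MAD."""
    median = statistics.median(history)
    mad = statistics.median([abs(x - median) for x in history])
    return median, mad


def flag_anomalies(values, baseline, cutoff=3.5):
    """Return values whose modified z-score exceeds `cutoff`."""
    median, mad = baseline
    if mad == 0:
        # No spread in the history: anything different from it is unusual
        return [v for v in values if v != median]
    # 0.6745 scales the MAD so scores are comparable to ordinary z-scores
    return [v for v in values if abs(0.6745 * (v - median) / mad) > cutoff]


# Hypothetical daily order counts from a source system
history = [102, 98, 101, 97, 103, 99, 100, 100]
incoming = [101, 96, 250, 0]
print(flag_anomalies(incoming, fit_baseline(history)))  # [250, 0]
```

A fixed rule such as "order count must be non-negative" would accept both 250 and 0, while the learned baseline flags them because they fall far outside the historical pattern.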

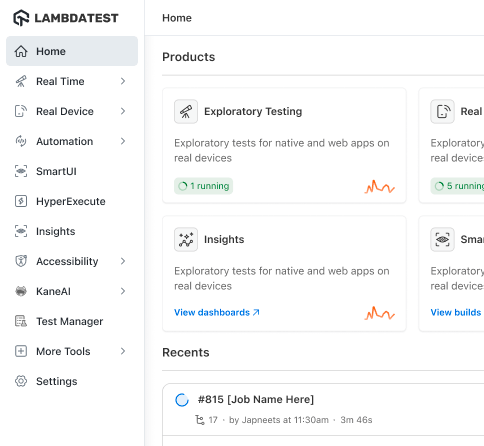

How Does AI Augment Data Integration?

Here is how AI helps in each stage of the data integration process:

- Field Mapping and Schema Matching: It automatically matches fields between databases by analyzing names, data types, and context. It reduces manual mapping work and improves accuracy in aligning source and target schemas.

- Data Quality and Anomaly Detection: It identifies and corrects errors, duplicates, and inconsistencies. It learns typical data patterns and flags unusual entries for review, improving overall data quality.

- Entity Resolution: It matches records that refer to the same real-world entity, even with variations in names or addresses. It uses multiple data points to merge duplicates and create unified records.

- Workflow Optimization: It recommends the best sequence for data processing and can adjust pipelines in real time to avoid failures or delays. It improves efficiency and ensures timely data delivery.

- Metadata Management and Governance: It auto-generates metadata, classifies sensitive data, and applies compliance rules. It enhances searchability and ensures integrated data is well-organized and secure.
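
The entity-resolution step above, matching records that use different spellings of names or addresses, can be sketched with normalization, fuzzy string similarity across several fields, and union-find to merge matched pairs into groups. This is a minimal, hypothetical illustration: the records, the abbreviation table, and the 0.85 threshold are assumptions, and AI-based tools typically learn the matching function rather than averaging fixed scores.

```python
import re
from difflib import SequenceMatcher

# Hypothetical abbreviation table used to canonicalize addresses
ABBREVIATIONS = {"st": "street", "rd": "road", "ave": "avenue"}


def canonical(text):
    # Lowercase, replace punctuation with spaces, expand abbreviations
    words = re.sub(r"[^a-z0-9 ]", " ", text.lower()).split()
    return " ".join(ABBREVIATIONS.get(w, w) for w in words)


def same_entity(a, b, threshold=0.85):
    """Compare name and address separately and average the two similarity scores."""
    scores = [
        SequenceMatcher(None, canonical(a[field]), canonical(b[field])).ratio()
        for field in ("name", "address")
    ]
    return sum(scores) / len(scores) >= threshold


def resolve(records):
    """Group matching records into entities using union-find over pairwise matches."""
    parent = list(range(len(records)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if same_entity(records[i], records[j]):
                parent[find(i)] = find(j)

    groups = {}
    for i, record in enumerate(records):
        groups.setdefault(find(i), []).append(record["id"])
    return sorted(groups.values())


records = [
    {"id": "crm-1", "name": "Jon Smith", "address": "12 Main St."},
    {"id": "erp-7", "name": "John Smith", "address": "12 Main Street"},
    {"id": "web-3", "name": "Jane Doe", "address": "4 Oak Ave"},
]
print(resolve(records))  # [['crm-1', 'erp-7'], ['web-3']]
```

Comparing every pair is quadratic, so larger datasets usually add a "blocking" step (for example, only comparing records that share a postcode) before scoring.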

AI Tools and Platforms Used for Data Integration

Here are some of the AI tools and platforms used for data integration:

- Informatica CLAIRE: CLAIRE is Informatica’s AI engine that automates data integration, quality checks, and schema mapping. It learns from metadata and user actions to suggest improvements. CLAIRE GPT enables natural language interaction, helping non-technical users find and manage data.

- IBM Watson Knowledge Catalog: Watson Knowledge Catalog by IBM uses AI to classify, enrich, and govern data during integration. It auto-tags assets, identifies sensitive fields, and applies policies. It enhances data trust, governance, and discovery, which makes it well suited to enterprises that prioritize compliance.

- Talend Data Fabric: Talend Data Fabric, powered by Qlik, uses AI to automate integration workflows. It suggests data mappings, detects anomalies, and assesses data quality via a Trust Score. This platform also supports no-code pipelines, making it accessible to both technical and non-technical users.

- SnapLogic Iris: SnapLogic’s Iris AI assistant helps build integration pipelines by recommending next steps based on previous patterns. It supports natural language interaction via chat and helps reduce errors and development time for teams seeking intuitive, code-free, AI-driven integration.

Real-World Examples of AI in Data Integration

Let’s look at some real-world examples where AI is used in data integration.

- Technology: Tech Mahindra built a system where AI takes care of mapping data, checking for errors, and handling data from various sources. This helps organizations integrate their sales, finance, and customer data more easily. It also makes their data ready for reports and dashboards without much manual work.

- Healthcare: Highmark Health adopted an AI-based data system. Before this, tasks such as matching patient records and checking data quality were done manually. Now AI handles most of this work, which saves time and reduces mistakes, and it has helped the data team automate much of its data process.

Challenges of AI in Data Integration

AI can improve data integration, but it also brings some challenges. Privacy concerns, technical complexity, and the risk of inaccurate results are common in real-world use.

- Inaccuracy and Bias: AI models reflect the quality of their training data. If that data is biased or incomplete, the results will be too. It can lead to incorrect data mappings and silent errors.

- Deep Technical Expertise: AI integration isn’t simple. It requires specialized AI skills, robust infrastructure, and suitable tools. Without them, the integration may fail, produce inaccurate results, or become difficult to maintain.

- Privacy and Security Risks: AI tools often process sensitive data. Using cloud-based AI tools can violate privacy laws if not managed properly.

Future of AI Data Integration

Here are key trends shaping the future of AI in data integration:

- Smarter Integration Workflows: AI will increasingly handle complex tasks like schema mapping, entity resolution, and anomaly detection, reducing the need for manual coding.

- Real-Time Processing: AI will make real-time data integration more reliable, helping organizations process and sync live data streams instantly.

- Predictive Capabilities: AI will begin to predict the best integration paths, transformation logic, or data cleaning steps based on past patterns and context, speeding up the integration lifecycle.

Conclusion

Artificial intelligence is changing how data integration works by making traditional methods faster and more accurate while introducing new ways to manage data.

With advancements in AI, you can expect faster and more accurate data processing in the future. AI will continue to improve data security, support real-time analysis, and simplify integration across different platforms. As AI tools develop further, they will help teams manage data more effectively and adapt to changing requirements.

Frequently Asked Questions (FAQs)

How is integration used in AI?

Integration in AI means adding artificial intelligence into systems and tools to make them work better. Instead of keeping AI separate, it becomes part of the setup, helping with things like learning from data, automating tasks, and making decisions.

What is an example of data integration?

A good example is an organization combining data from marketing, customer support, user activity, and finance into one system. This lets them track how customers behave, send personalized emails, fix support problems, and manage costs all in one place, making everything run smoother.
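
As a rough sketch of that example, the snippet below merges hypothetical marketing, support, and finance extracts into one profile per customer. It assumes the records already share a clean `customer_id` key; in practice, producing that shared key is exactly what the schema-matching and entity-resolution steps described earlier are for.

```python
from collections import defaultdict

# Hypothetical extracts from three departmental systems, already keyed by customer_id
marketing = [{"customer_id": 1, "campaign": "spring_sale"}, {"customer_id": 2, "campaign": "newsletter"}]
support = [{"customer_id": 1, "open_tickets": 2}]
finance = [{"customer_id": 1, "revenue": 120.0}, {"customer_id": 2, "revenue": 45.5}]


def unify(*sources):
    """Merge records from several sources into one profile per customer_id.

    When two sources share a field name, the source passed later wins.
    """
    profiles = defaultdict(dict)
    for source in sources:
        for record in source:
            profiles[record["customer_id"]].update(record)
    return dict(profiles)


for customer_id, profile in unify(marketing, support, finance).items():
    print(customer_id, profile)
# 1 {'customer_id': 1, 'campaign': 'spring_sale', 'open_tickets': 2, 'revenue': 120.0}
# 2 {'customer_id': 2, 'campaign': 'newsletter', 'revenue': 45.5}
```

The "later source wins" rule is the simplest conflict policy; real integrations often prefer a designated system of record per field instead.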

How is AI used in data?

AI is used to gather, sort, and clean data automatically. It finds patterns, fixes errors like duplicates, and predicts what might happen next. It helps businesses handle big datasets, make smarter choices, and get useful insights without needing to do everything by hand.

How does AI handle unstructured or semi-structured data?

AI uses NLP and machine learning to extract and standardize data from text, images, and PDFs, making non-tabular formats much easier to integrate.
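
The goal of that extraction, turning free text into a structured record, can be shown with a simple rule-based stand-in. The sketch below uses hand-written regular expressions rather than NLP or machine learning; the invoice layout, field names, and patterns are hypothetical, and an ML-based extractor would learn such patterns instead of relying on fixed ones.

```python
import re

# Hypothetical field patterns for a simple invoice layout
PATTERNS = {
    "invoice_number": r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)",
    "date": r"(\d{4}-\d{2}-\d{2})",
    "total": r"\bTotal\s*:?\s*\$?([\d,]+\.\d{2})",  # \b stops "Subtotal" from matching
}


def extract_fields(text):
    """Pull a fixed set of fields out of free text; missing fields become None."""
    record = {}
    for field, pattern in PATTERNS.items():
        match = re.search(pattern, text, flags=re.IGNORECASE)
        record[field] = match.group(1) if match else None
    if record["total"] is not None:
        record["total"] = float(record["total"].replace(",", ""))  # "1,234.50" -> 1234.5
    return record


text = "ACME Corp\nInvoice No: INV-2041\nIssued 2025-03-14\nSubtotal: $1,150.00\nTotal: $1,234.50\n"
print(extract_fields(text))
# {'invoice_number': 'INV-2041', 'date': '2025-03-14', 'total': 1234.5}
```

Fixed patterns like these break as soon as a supplier changes its layout, which is the main reason learned extractors are used for unstructured sources.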

Can AI support real-time data sync across cloud and on-prem systems?

Yes. AI enables real-time sync using stream processing and adaptive algorithms, ensuring data stays consistent across hybrid environments.

How does AI ensure data quality and compliance?

AI can clean data automatically and flag sensitive information for compliance, reducing manual effort and improving accuracy.
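
Flagging sensitive information usually happens at the column level: sample the values in each column and tag the column if most of them look like personal data. The sketch below is a rule-based stand-in for the AI classifiers described above; the detectors, labels, table, and the 0.8 share threshold are hypothetical, and catalog tools use their own, more extensive classification.

```python
import re

# Hypothetical detectors; real catalogs use many more, plus learned classifiers
DETECTORS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def classify_columns(table, min_share=0.8):
    """Tag a column as sensitive when at least `min_share` of its non-empty values match a detector."""
    tags = {}
    for column, values in table.items():
        non_empty = [v for v in values if v]
        if not non_empty:
            continue
        for label, pattern in DETECTORS.items():
            hits = sum(1 for v in non_empty if pattern.fullmatch(v))
            if hits / len(non_empty) >= min_share:
                tags[column] = label
                break  # first matching detector wins
    return tags


table = {
    "contact": ["ana@example.com", "li@example.org", ""],
    "ssn": ["123-45-6789", "987-65-4321"],
    "mobile": ["+1 415 555 0100", "+44 20 7946 0958"],
    "city": ["Berlin", "Austin"],
}
print(classify_columns(table))  # {'contact': 'email', 'ssn': 'us_ssn', 'mobile': 'phone'}
```

Tagging by share of matching values, rather than by any single match, keeps one stray email address in a free-text column from labeling the whole column as sensitive.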
