What are Vector Embeddings?

Last Updated: 03 Jun, 2025

Vector embeddings are digital fingerprints: numerical representations of words or other pieces of data. Each object is transformed into a list of numbers called a vector. These vectors capture properties of the object in a form that machine learning models can process more easily.

Vector embedding

Here, each object is transformed into a numerical vector using an embedding model. These vectors capture features and relationships.

What are Vectors?

- A vector is a one-dimensional array of numbers, holding multiple scalars of the same data type.

- Vectors represent properties and features in a form that machines can work with.

- Let's take an example to represent vectors:

```python
import numpy as np

# Three example vectors, each a one-dimensional array of three floats
vector_sales = np.array([0.4, 0.3, 0.8])
vector_cost = np.array([0.2, 0.3, 0.1])
vector_prices = np.array([0.9, 0.8, 0.7])
```

Why do we use Vector embeddings?

- Compare similarities: Vector embeddings quantify semantic similarity through the distance or angle between vectors. Two vectors that point in nearly the same direction are considered similar.

- Clustering: Machine learning algorithms like k-means clustering or DBSCAN can be applied directly to vector embeddings to group related data.

- Perform arithmetic operations: Vector embeddings support arithmetic such as addition and subtraction. These operations let embeddings encode structured relationships between entities and are used in knowledge representation, analogy-solving tasks and AI reasoning.

- Feed machine-understandable data: Vector embeddings convert complex, unstructured data into one-dimensional arrays of numbers (vectors) that can then be used in various applications.
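The similarity and arithmetic points above can be illustrated with a small sketch. The four word vectors below are hand-picked toy values, not the output of a trained model, and the two dimensions are assumed to stand loosely for "royalty" and "gender". The helper name `cosine_similarity` is our own.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between a and b; 1.0 means the same direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy vectors chosen by hand for illustration (assumption, not real embeddings).
embeddings = {
    "king":  np.array([0.9, 0.8]),
    "queen": np.array([0.9, 0.2]),
    "man":   np.array([0.1, 0.8]),
    "woman": np.array([0.1, 0.2]),
}

# Similarity: two vectors pointing the same way score (approximately) 1.
same_direction = cosine_similarity(np.array([1.0, 2.0]), np.array([2.0, 4.0]))

# Arithmetic: king - man + woman, then find the stored word nearest the result.
target = embeddings["king"] - embeddings["man"] + embeddings["woman"]
closest = min(embeddings, key=lambda w: np.linalg.norm(embeddings[w] - target))

print(round(same_direction, 3), closest)
```

With these toy values the analogy lands on "queen". Embeddings from a real model are much higher-dimensional, and such analogies hold only approximately.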

Types of Vector embeddings

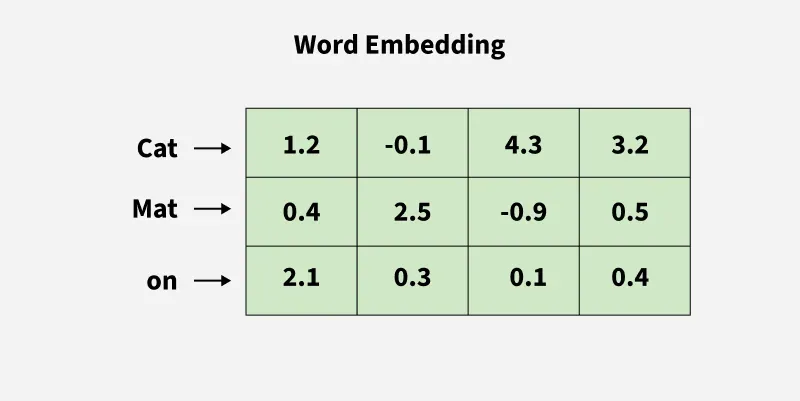

1. Word embedding

- Word embeddings capture not only the semantic meaning of words but also their contextual relationships to other words, which helps models measure similarity and cluster words based on their properties and features.

- For example:

Word embedding

In this image, each word is transformed into a numeric vector, and similar words have closer vector representations, allowing models to understand the relationships between them.

2. Sentence embedding

- Sentence embeddings aim to capture the semantic meaning of entire phrases or sentences rather than individual words. They can be generated with SBERT or other variants of sentence transformers.

- For example:

Sentence embedding

In this image, each word of the sentence is mapped to a number in the vector, and zero is assigned to vocabulary words that are not present in the sentence.
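The scheme described for the image (one slot per vocabulary word, zero for absent words) can be sketched as a simple count vector. This is only the simplest form of sentence vector; models such as SBERT produce dense, learned vectors instead. The vocabulary and the function name `sentence_to_vector` are illustrative assumptions.

```python
import numpy as np

def sentence_to_vector(sentence, vocabulary):
    """Count how often each vocabulary word occurs; absent words get 0."""
    tokens = sentence.lower().split()
    return np.array([tokens.count(word) for word in vocabulary])

# Hypothetical fixed vocabulary; each position in the vector maps to one word.
vocabulary = ["the", "cat", "sat", "on", "mat", "dog"]

vec = sentence_to_vector("The cat sat on the mat", vocabulary)
print(vec)  # "dog" is not in the sentence, so its slot stays 0
```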

3. Image embedding

- Image embeddings transform images into numerical representations that a model can use for image search, object recognition, and image generation.

- For example:

Image embedding

In this image, an image is converted into a numerical vector: the image is divided into a grid and each cell is represented by its pixel values.
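A minimal sketch of the grid idea described above, assuming a 2-D grayscale image stored as a NumPy array: each grid cell is summarized by its mean pixel value and the results are flattened into one vector. Real image embedding models learn their features rather than averaging pixels. The function name `image_to_vector` and the 4x4 sample image are made up for illustration.

```python
import numpy as np

def image_to_vector(image, grid=(2, 2)):
    """Split a 2-D grayscale image into grid cells and average each cell."""
    rows, cols = grid
    height, width = image.shape
    cell_h, cell_w = height // rows, width // cols
    # Crop so the image divides evenly, then view it as (rows, cell_h, cols, cell_w).
    cropped = image[: rows * cell_h, : cols * cell_w]
    cells = cropped.reshape(rows, cell_h, cols, cell_w)
    # Average over the pixels inside each cell and flatten to one vector.
    return cells.mean(axis=(1, 3)).ravel()

# Tiny 4x4 checkerboard-like image (illustrative data).
image = np.array([
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
], dtype=float)

vector = image_to_vector(image)
print(vector)
```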

4. Multimodal embedding

- Multimodal embeddings map different types of data (for example text, images and audio) into a shared embedding space.

- For example:

Multimodal embedding

In this image, data from different sources is processed by a shared embedding model, which converts all modalities into numerical vectors.
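The shared-space idea can be sketched with one linear projection per modality. Everything here is an assumption for illustration: the feature sizes, the random weights and the `embed` helper. In a real multimodal model the projections are learned so that matching text and image pairs end up close together.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw feature sizes for each modality, and the shared space size.
TEXT_DIM, IMAGE_DIM, SHARED_DIM = 5, 8, 4

# One projection per modality. These would normally be learned; here they are
# random, so the similarity value below carries no meaning.
text_projection = rng.normal(size=(TEXT_DIM, SHARED_DIM))
image_projection = rng.normal(size=(IMAGE_DIM, SHARED_DIM))

def embed(features, projection):
    """Project features into the shared space and scale to unit length."""
    vector = features @ projection
    return vector / np.linalg.norm(vector)

text_vec = embed(rng.normal(size=TEXT_DIM), text_projection)
image_vec = embed(rng.normal(size=IMAGE_DIM), image_projection)

# Both vectors now have the same shape, so they can be compared directly.
similarity = float(text_vec @ image_vec)
print(text_vec.shape, image_vec.shape)
```

The point of the sketch is only the shapes: inputs of different sizes end up as vectors of the same length that can be compared with a dot product.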

How do Vector embeddings work?

Let us take word embeddings as an example to understand how vectors are generated, using emotions. Here each emoji is transformed into a vector, and the conditions are our features.

Word embedding

For more explanation on word embeddings, please refer to: Word embedding in NLP
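The emoji example can be sketched as follows. The article does not list the actual features, so the three used here (happy, sad, angry) and all the scores are hypothetical. Emoji are referred to by name to keep the code plain ASCII.

```python
import numpy as np

# Hypothetical features ("conditions"); each vector position scores one of them.
features = ["happy", "sad", "angry"]

# Hand-assigned scores per emoji (assumed values, not from a trained model).
emoji_vectors = {
    "grinning_face":        np.array([1.0, 0.0, 0.0]),
    "crying_face":          np.array([0.0, 1.0, 0.0]),
    "angry_face":           np.array([0.0, 0.1, 1.0]),
    "slightly_smiling_face": np.array([0.7, 0.0, 0.0]),
}

def most_similar(name):
    """Return the other emoji whose vector has the highest cosine similarity."""
    query = emoji_vectors[name]

    def cosine(other):
        vec = emoji_vectors[other]
        return np.dot(query, vec) / (np.linalg.norm(query) * np.linalg.norm(vec))

    candidates = [other for other in emoji_vectors if other != name]
    return max(candidates, key=cosine)

print(most_similar("slightly_smiling_face"))
```

Here the slightly smiling face is matched with the grinning face because both score only on the "happy" feature, so their vectors point in the same direction.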

Applications

- Image embeddings transform images into numerical representations to perform image search, object recognition, and image generation.

- Product embeddings give personalized recommendations in e-commerce by finding similar products based on user preferences and purchase history.

- Audio embeddings represent audio data as vectors for tasks such as music discovery and speech recognition.

- Time series data can be converted into embeddings to uncover hidden patterns and support predictions.

- Graph data like social networks can be represented as vectors to analyze complex relationships and extract valuable features.

- Document embeddings represent documents as vectors that can power efficient search engines.
