Top 6 Machine Learning Classification Algorithms

Last Updated: 04 Sep, 2024

Are you looking for effective machine learning algorithms for classification tasks? Understanding how classification algorithms work is essential for professionals who need reliable solutions across diverse fields. This article examines six widely used machine learning algorithms for classification, explains how each one works, and shows where it can be applied to practical problems.

Machine Learning Algorithms

Each machine learning algorithm for classification offers benefits suited to specific challenges, whether it is the high-dimensional strength of Support Vector Machines, the straightforward structure of Decision Trees, or the simplicity of Logistic Regression. Classification is a supervised learning task, distinct from unsupervised and reinforcement learning, and understanding these methods is key to applying them in real-world scenarios.

What is Classification in Machine Learning?

Classification in machine learning is a supervised learning approach where the goal is to predict the category, or class, of an instance based on its features. It involves training a model on a dataset whose instances are already labeled with classes, and then using that model to assign new, unseen instances to one of the predefined categories.

List of Machine Learning Classification Algorithms

Classification algorithms assign data points to classes or labels, which makes it possible to automate decision-making and pattern identification on complex datasets. A common example is email spam detection: a classifier analyzes the content of each email, learns the patterns typical of spam, and makes real-time judgments that improve email security.

Some of the top-ranked machine learning algorithms for Classification are:

- Logistic Regression

- Decision Tree

- Random Forest

- Support Vector Machine (SVM)

- Naive Bayes

- K-Nearest Neighbors (KNN)

Let us look at each of them in turn:

1. Logistic Regression Classification Algorithm in Machine Learning

Logistic regression is a classification algorithm used to predict discrete values, typically binary ones such as 0 and 1 or yes and no. It predicts the probability that an instance belongs to a class, which makes it essential for binary classification problems such as spam detection or disease diagnosis.

The logistic function is well suited to classification because its output lies between 0 and 1. Logistic regression is widely used for its simplicity, interpretability, and efficiency. It works best when the log-odds of the event are approximately linear in the features. Despite its name, it is a classification method: a linear model whose output is passed through a logistic function to give the probability of class membership.

Logistic Regression (Graph)

Features of Logistic Regression

- Binary Outcome: Logistic regression is used when the dependent variable is binary in nature, meaning it has only two possible outcomes (e.g., yes/no, 0/1, true/false).

- Probabilistic Results: It predicts the probability of the occurrence of an event by fitting data to a logistic function. The output is a value between 0 and 1, which represents the probability that a given input belongs to the '1' category.

- Odds Ratio: It estimates the odds ratio in the presence of more than one explanatory variable. The odds ratio can be used to understand the strength of the association between the independent variables and the dependent binary variable.

- Logistic and Logit Functions: Logistic regression models the data with the logistic (sigmoid) function, an S-shaped curve that takes any real-valued number and maps it to a value between 0 and 1. Its inverse, the logit function, maps a probability to the log-odds, which the model treats as a linear function of the features.
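The features above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the toy data (hours studied versus pass/fail), the function names, the learning rate, and the number of epochs are all assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic (sigmoid) function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    """Logit (log-odds) function: the inverse of the sigmoid."""
    return math.log(p / (1.0 - p))

def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(x, w, b):
    """Probability that x belongs to the '1' class."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical toy data: hours studied -> passed (1) or failed (0)
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(X, y)
p_low = predict_proba([1.0], w, b)    # probability of passing after 1 hour
p_high = predict_proba([4.0], w, b)   # probability of passing after 4 hours
odds_ratio = math.exp(w[0])           # odds multiplier per extra hour
```

Exponentiating a fitted coefficient gives the odds ratio for a one-unit increase in that feature, which is how the coefficients are usually interpreted.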

2. Decision Tree

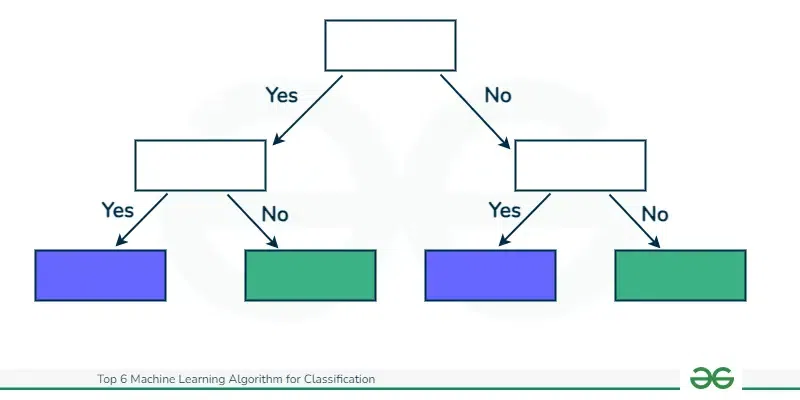

Decision Trees are versatile and simple techniques for classification and regression. The algorithm recursively splits the dataset into subgroups based on the most informative feature tests, producing a tree-like structure. Each internal node applies a decision rule, and the leaf nodes give the final prediction. Decision trees are easy to understand and visualize, which makes them useful for decision-making. They can overfit, so pruning is often used to improve generalization. More generally, a decision tree is a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs and utility.

Decision trees model decisions and their possible results as a tree, with branches representing choices and leaves representing outcomes.

Decision Tree

Features of Decision Tree

- Tree-Like Structure: Decision Trees have a flowchart-like structure, where each internal node represents a "test" on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules.

- Simple to Understand and Interpret: One of the main advantages of Decision Trees is their simplicity and ease of interpretation. They can be visualized, which makes it easy to understand how decisions are made and explain the reasoning behind predictions.

- Versatility: Decision Trees can handle both numerical and categorical data and can be used for both regression and classification tasks, making them versatile across different types of data and problems.

- Feature Importance: Decision Trees inherently perform feature selection, giving insights into the most significant variables for making the predictions. Features tested near the top of the tree usually have the greatest influence on the predictions, which gives a straightforward way to identify critical variables.
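To make the idea of a "test" at a node concrete, the sketch below searches for the single best threshold split using Gini impurity, one common splitting criterion. The toy data, the choice of Gini impurity, and the function names are assumptions for illustration; a full tree would apply the same search recursively to each resulting subgroup.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0 for a pure node, higher when classes are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature_index, threshold, weighted_gini) of the best binary split."""
    best = (None, None, gini(labels))
    n = len(rows)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # a split must send samples to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (f, t, score)
    return best

# Hypothetical toy data: [age, income in thousands] -> buys the product?
rows = [[25, 40], [30, 60], [45, 80], [35, 30], [50, 90], [23, 20]]
labels = ["no", "yes", "yes", "no", "yes", "no"]

feature, threshold, impurity = best_split(rows, labels)
# Here the split "income <= 40" separates the two classes perfectly,
# so income (feature index 1) becomes the root test.
```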

3. Random Forest

Random Forest is an ensemble learning technique that combines multiple decision trees to improve predictive accuracy and control overfitting. By aggregating the predictions of many trees, it reduces the variance of any single tree and is more robust to noise.

It constructs many trees and combines their predictions, by majority vote for classification or averaging for regression, to create a reliable model. Diversity is added by training each tree on a random bootstrap sample of the data and considering a random subset of features at each split. Random Forests handle high-dimensional data well, provide feature importance metrics, and resist overfitting. Many fields use them for classification and regression.

Random Forest

Features of Random Forest

- Ensemble Method: Random Forest uses the ensemble learning technique, where multiple learners (decision trees, in this case) are trained to solve the same problem and combined to get better results. The ensemble approach improves the model's accuracy and robustness.

- Handling Both Types of Data: It can handle both categorical and continuous input and output variables, making it versatile for different types of data.

- Reduction in Overfitting: By averaging multiple trees, Random Forest reduces the risk of overfitting, making the model more generalizable than a single decision tree.

- Handling Missing Values: Some Random Forest implementations can handle missing values, for example by filling in the median for numerical variables or the mode for categorical variables. Other implementations expect missing values to be imputed before training.
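The ensemble idea can be sketched with the standard library alone. This is a deliberately simplified illustration, not a full Random Forest: each "tree" is a one-level stump, the random feature subset is drawn once per tree rather than at every split, and the toy data, function names, and parameter defaults are assumptions for the example.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, features):
    """One-level tree: best threshold split over the given feature subset."""
    best = None
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, f, t, majority(left), majority(right))
    if best is None:  # no valid split: always predict the majority class
        m = majority(labels)
        return (features[0], float("inf"), m, m)
    return best[1:]

def predict_stump(stump, row):
    f, t, left_label, right_label = stump
    return left_label if row[f] <= t else right_label

def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]            # bootstrap sample
        feats = rng.sample(range(len(rows[0])), n_features)   # random feature subset
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feats))
    return forest

def predict_forest(forest, row):
    """Aggregate the individual predictions by majority vote."""
    return majority([predict_stump(s, row) for s in forest])

# Hypothetical toy data with two well-separated classes
rows = [[1, 1], [2, 1], [1, 2], [2, 2], [8, 8], [9, 8], [8, 9], [9, 9]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

forest = fit_forest(rows, labels)
pred_small = predict_forest(forest, [1, 1])
pred_large = predict_forest(forest, [9, 9])
```

Because every stump sees a different bootstrap sample and feature subset, individual stumps can disagree, and the vote smooths out their individual errors.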

4. Support Vector Machine (SVM)

SVM is an effective algorithm for classification and regression. It seeks the hyperplane that best separates the classes while maximizing the margin. SVM works well in high-dimensional spaces and handles nonlinear feature interactions through the kernel trick.

With appropriate regularization, SVM is fairly robust against overfitting and often generalizes well to different datasets. It finds applications in image recognition, text classification, and bioinformatics, among other fields.

Support Vector Machine

Features of Support Vector Machine

- Margin Maximization: SVM aims to find the hyperplane that separates different classes in the feature space with the maximum margin. The margin is defined as the distance between the hyperplane and the nearest data points from each class, known as support vectors. Maximizing this margin increases the model's robustness and its ability to generalize well to unseen data.

- Support Vectors: The algorithm is named after these support vectors, which are the critical elements of the training dataset. The position of the hyperplane is determined based on these support vectors, making SVMs relatively memory efficient since only the support vectors are needed to define the model.

- Kernel Trick: One of the most powerful features of SVM is its use of kernels, which allows the algorithm to operate in a higher-dimensional space without explicitly computing the coordinates of the data in that space. This makes it possible to handle non-linearly separable data by applying linear separation in this higher-dimensional feature space.

- Versatility: Through the choice of the kernel function (linear, polynomial, radial basis function (RBF), sigmoid, etc.), SVM can be adapted to solve a wide range of problems, including those with complex, non-linear decision boundaries.
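The kernel trick can be checked numerically. The sketch below, using assumed function names and example points, shows that a degree-2 polynomial kernel evaluated in the original 2-D space equals an ordinary dot product in an explicitly mapped 3-D space, so the mapping never has to be computed. An RBF kernel is included for comparison.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel: K(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

def phi(x):
    """Explicit feature map for 2-D inputs that corresponds to poly2_kernel."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]

def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

# Hypothetical example points
x, z = [1.0, 2.0], [3.0, 0.5]

implicit = poly2_kernel(x, z)     # computed in the original 2-D space
explicit = dot(phi(x), phi(z))    # computed in the mapped 3-D space
similarity = rbf_kernel(x, z)     # between 0 and 1; equals 1 only for identical points
```

Both `implicit` and `explicit` come out to 16 (up to floating-point rounding), which is the point of the trick: the kernel gives the higher-dimensional inner product at the cost of a 2-D computation.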

5. Naive Bayes

Naive Bayes is a probabilistic classification algorithm based on Bayes' theorem, and it is widely used for text categorization and spam filtering. Despite its simplicity and "naive" assumption of feature independence, Naive Bayes often works well in practice. It uses the conditional probabilities of the features to calculate how likely an instance is to belong to each class. Naive Bayes handles high-dimensional datasets quickly.

Bayes' theorem describes the probability of an event based on prior knowledge of conditions that might be related to the event. Naive Bayes classifiers assume that the presence (or absence) of a particular feature is unrelated to the presence (or absence) of any other feature, given the class variable.

Features of Naive Bayes

- Probabilistic Foundation: Naive Bayes classifiers apply Bayes' theorem to compute the probability that a given instance belongs to a particular class, making decisions based on the posterior probabilities.

- Feature Independence: The algorithm assumes that the features used to predict the class are independent of each other given the class. This assumption, although naive and often violated in real-world data, simplifies the computation and is surprisingly effective in practice.

- Efficiency: Naive Bayes classifiers are highly efficient, requiring a small amount of training data to estimate the necessary parameters (probabilities) for classification.

- Easy to Implement and Understand: The algorithm is straightforward to implement and interpret, making it accessible for beginners in machine learning. It provides a good starting point for classification tasks.
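As a rough illustration of these ideas, the following sketch implements a small multinomial Naive Bayes text classifier with add-one (Laplace) smoothing. The tiny spam/ham dataset, the function names, and the choice of the multinomial variant are assumptions for the example.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs, labels):
    """Estimate class counts and per-class word counts from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for words, label in zip(docs, labels):
        word_counts[label].update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab

def predict_nb(words, model):
    """Return the class with the highest posterior score for the given words."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for c in class_counts:
        # log P(c) + sum of log P(word | c), with add-one (Laplace) smoothing
        score = math.log(class_counts[c] / total_docs)
        total_words = sum(word_counts[c].values())
        for w in words:
            if w not in vocab:
                continue  # ignore words never seen in training
            score += math.log((word_counts[c][w] + 1) / (total_words + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

# Hypothetical toy training set
docs = [
    ["win", "money", "now"],
    ["free", "money", "offer"],
    ["meeting", "at", "noon"],
    ["project", "meeting", "notes"],
]
labels = ["spam", "spam", "ham", "ham"]

model = train_nb(docs, labels)
pred_spam = predict_nb(["free", "money"], model)
pred_ham = predict_nb(["meeting", "notes"], model)
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and the smoothing keeps a single unseen word from forcing a class probability to zero.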

6. K-Nearest Neighbors (KNN)

KNN classifies an instance by the majority class among its k nearest neighbours, and it can also be used for regression by averaging the neighbours' values. It is non-parametric, so it makes no assumptions about the data distribution. It copes well with irregular decision boundaries and performs well on a variety of tasks. KNN is an instance-based, or lazy, learning algorithm: the function is only approximated locally, and all computation is deferred until prediction. It classifies new cases based on a similarity measure (e.g., distance functions). KNN is widely used in recommendation systems, anomaly detection, and pattern recognition due to its simplicity and effectiveness in handling non-linear data.

K-Nearest Algorithm

Features of K-Nearest Neighbors (KNN)

- Instance-Based Learning: KNN is a type of instance-based or lazy learning algorithm, meaning it does not explicitly learn a model. Instead, it memorizes the training dataset and uses it to make predictions.

- Simplicity: One of the main advantages of KNN is its simplicity. The algorithm is straightforward to understand and easy to implement, requiring no training phase in the traditional sense.

- Non-Parametric: KNN is a non-parametric method, meaning it makes no underlying assumptions about the distribution of the data. This flexibility allows it to be used in a wide variety of situations, including those where the data distribution is unknown or non-standard.

- Flexibility in Distance Choice: The algorithm's performance can be significantly influenced by the choice of distance metric (e.g., Euclidean, Manhattan, Minkowski). This flexibility allows for customization based on the specific characteristics of the data.
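A minimal KNN classifier fits in a few lines, which reflects the points above: there is no training step, only stored data and a distance function. The toy data, function names, and default `k=3` are assumptions for illustration.

```python
import math
from collections import Counter

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))

def knn_predict(train_X, train_y, query, k=3, distance=euclidean):
    """Classify `query` by majority vote among its k nearest training points."""
    neighbors = sorted(zip(train_X, train_y),
                       key=lambda pair: distance(pair[0], query))
    top_k = [label for _, label in neighbors[:k]]
    return Counter(top_k).most_common(1)[0][0]

# Hypothetical toy data: two clusters labeled "A" and "B"
train_X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.5], [6.0, 6.5], [7.0, 6.0], [6.5, 7.0]]
train_y = ["A", "A", "A", "B", "B", "B"]

pred_a = knn_predict(train_X, train_y, [2.0, 2.0])
pred_b = knn_predict(train_X, train_y, [6.0, 6.0], distance=manhattan)
```

Because distances drive every prediction, features on very different scales should normally be standardized before KNN is applied.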

Comparison of Top Machine Learning Classification Algorithms

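The table below compares five of the algorithms discussed above, together with Gradient Boosting, a boosting-based ensemble method included for reference. Logistic Regression is not listed in the table.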

| Feature | Decision Tree | Random Forest | Naive Bayes | Support Vector Machines (SVM) | K-Nearest Neighbors (KNN) | Gradient Boosting |
|---|---|---|---|---|---|---|
| Type | Tree-based model | Ensemble Learning (Bagging model) | Probabilistic model | Margin-based model | Instance-based model | Ensemble Learning (Boosting model) |
| Output | Categorical or Continuous | Categorical or Continuous | Categorical | Categorical or Continuous | Categorical or Continuous | Categorical or Continuous |
| Assumptions | Minimal | Similar to Decision Tree, but assumes that a combination of models improves accuracy | Assumes feature independence given the class | Assumes classes can be separated by a margin, possibly after a kernel mapping to a higher-dimensional space | Assumes similar instances lead to similar outcomes | Assumes weak learners can be improved sequentially |
| Strengths | Simple, interpretable, handles both numerical and categorical data | Handles overfitting better than Decision Trees, good for large datasets | Efficient, works well with high-dimensional data | Effective in high-dimensional spaces, versatile | Simple, effective for small datasets, no model training required | Reduces bias and variance, good for complex datasets |
| Weaknesses | Prone to overfitting, not ideal for very large datasets | More complex and computationally intensive than Decision Trees | Simplistic assumption can limit performance on complex problems | Can be memory intensive, difficult to interpret | Sensitive to the scale of the data and irrelevant features | Can be prone to overfitting, computationally intensive |
| Use Cases | Classification and regression tasks, feature importance analysis | Large datasets with high dimensionality, classification and regression tasks | Text classification, spam filtering, sentiment analysis | Image recognition, text categorization, bioinformatics | Recommendation systems, anomaly detection, pattern recognition | Web search ranking, credit risk analysis, fraud detection |
| Training Time | Fast | Slower than Decision Tree due to ensemble method | Very fast | Medium to high, depending on kernel choice | Negligible (the data is only stored), but prediction is slow for large datasets | Slow, due to sequential model building |
| Interpretability | High | Medium (due to ensemble nature) | High (simple probabilistic model) | Low (complex transformations) | High | Medium |

Choosing the Right Algorithm for Your Data

The problem and the dataset must be considered before choosing a machine learning algorithm. Each algorithm has its own strengths and suits different data and problems. As a rough guide, consider:

- Decision Trees and Random Forests for their versatility and ease of use.

- Logistic Regression for binary classification problems.

- Support Vector Machines for high-dimensional data.

- K-Nearest Neighbors for its simplicity and effectiveness on smaller datasets.

- Deep Learning models, such as CNNs for image classification and RNNs for sequence data, which are more complex but powerful for high-dimensional data and complex problem spaces.

Conclusion

Classification methods from machine learning have transformed the analysis of complex data. This article examined six widely used machine learning algorithms for classification: Logistic Regression, Decision Tree, Random Forest, Support Vector Machine, Naive Bayes, and K-Nearest Neighbors, with Gradient Boosting included in the comparison table. Each algorithm suits different classification problems because of its distinct properties and applications. Understanding these algorithms' strengths and drawbacks helps data scientists and practitioners solve real-world classification problems.
