Reducible Error vs Irreducible Error in Machine Learning

Last Updated :

23 Jul, 2025

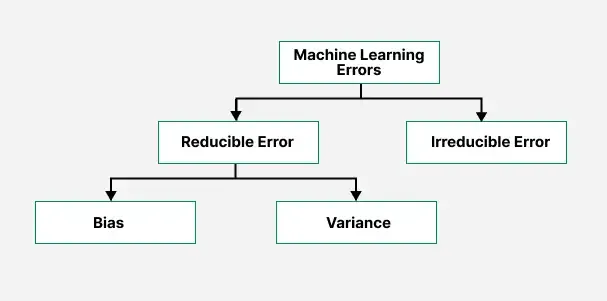

There are two types of errors in machine learning model. They are reducible and irreducible error. We cannot reduce the irreducible error as it is caused by factors that are beyond our control, but we can reduce the reducible error since it arises from factors that we can manage or improve.

Machine Learning Error

Machine Learning Error

Total Error that occur when a model do prediction is:

Total Error = Bias2 + Variance + Irreducible Error

Irreducible Error

Irreducible error is the error in a machine learning model that cannot be reduced, as it is due to unknown or uncontrollable factors. Irreducible error is caused by unknown or uncontrollable variables and is inherent in the data.

y=f(x)+\varepsilon

- Y: The actual outcome you want to predict.

- X: The input features.

- f(X): The true underlying relationship between x and y.

- \varepsilon: The noise or irreducible error randomness in data that can’t be predicted or removed.

Irreducible Error

Irreducible ErrorThe graph shows that even if we build the perfect model to predict house prices based on size, the predictions will never be 100% accurate. That’s because there are always some things we just can’t account for like sudden market changes, noise in the data, or hidden factors like the house’s condition or neighborhood vibe. These unpredictable influences are called irreducible error.

Reducible error

Reducible error is the error in a machine learning model that can be reduced, as it is due to known or controllable factors. Reducible error is caused by known and controllable variables. Reducible errors are caused by bias and variance in the model's predictions, and they can be minimized by improving the model's complexity, using better algorithms, or tuning hyperparameters.

Reducible Error (\varepsilon) = Y - f(X)

Reducible Error

Reducible Error

In machine learning, reducible and irreducible error are two main component of total error prediction. Reducible error arises from issues like high bias high variance and can be minimized by improving the model, tuning hyperparameters, or selecting better features. In contrast, irreducible error is caused by unknown, random, or uncontrollable factors such as noise in data, measurement errors, or hidden variables that no model can predict, no matter how advanced.

Comparison Between Irreducible Error and Reducible Error

Irreducible Error | Reducible Error |

|---|

Error that cannot be reduced by improving the model's data. | Error that can be reduced by improving the model's data. |

|---|

Due to random noise or unmeasurable variables in the data. | Due to bias and variance in the model. |

|---|

Cannot be controlled. | Can be controlled by techniques like model tuning, feature engineering, etc. |

|---|

Sets a lower bound on the total error achievable. | Affects how well the model can learn from data. |

|---|

Related Articles

Similar Reads

Rule Engine vs Machine Learning? Answer: Rule engines use predefined logic to make decisions, while machine learning algorithms learn from data to make predictions or decisions.Rule engines and machine learning represent two fundamentally different approaches to decision-making and prediction in computer systems. While rule engines

2 min read

What is Inductive Bias in Machine Learning? In the realm of machine learning, the concept of inductive bias plays a pivotal role in shaping how algorithms learn from data and make predictions. It serves as a guiding principle that helps algorithms generalize from the training data to unseen data, ultimately influencing their performance and d

5 min read

50 Machine Learning Terms Explained Machine Learning has become an integral part of modern technology, driving advancements in everything from personalized recommendations to autonomous systems. As the field evolves rapidly, it’s essential to grasp the foundational terms and concepts that underpin machine learning systems. Understandi

8 min read

How to handle Noise in Machine learning? Random or irrelevant data that intervene in learning's is termed as noise. What is noise?In Machine Learning, random or irrelevant data can result in unpredictable situations that are different from what we expected, which is known as noise. It results from inaccurate measurements, inaccurate data c

5 min read

K- Fold Cross Validation in Machine Learning K-Fold Cross Validation is a statistical technique to measure the performance of a machine learning model by dividing the dataset into K subsets of equal size (folds). The model is trained on K − 1 folds and tested on the last fold. This process is repeated K times, with each fold being used as the

4 min read

Evaluation Metrics in Machine Learning When building machine learning models, it’s important to understand how well they perform. Evaluation metrics help us to measure the effectiveness of our models. Whether we are solving a classification problem, predicting continuous values or clustering data, selecting the right evaluation metric al

9 min read