Kernels (Filters) in Convolutional Neural Networks

Last Updated: 03 May, 2025

Convolutional Neural Networks (CNNs) are neural networks commonly used for processing image data. Kernels, also known as filters, are an important part of CNNs: they help the network extract important features from images, such as edges, textures and patterns. In this article, we will look at kernels in more detail.

Understanding Kernels

Kernels are small matrices used to extract features from input data such as images. Each kernel detects a specific feature, which allows a CNN to understand and interpret the content of an image. The values of a kernel are learned during training through backpropagation, which updates them so that the kernel better detects the features that matter for the task. This helps CNNs perform image classification, object detection and other computer vision tasks by recognizing patterns in different ways.

1. Function of Kernels

Kernels slide over the input data, such as an image. At each position, the kernel's values are multiplied element-wise with the input values beneath it, and the results are summed into a single number. This process extracts specific features from the input, such as edges, corners or textures, depending on the kernel's values.
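As a minimal sketch of this sliding multiply-and-sum, assuming NumPy, a stride of 1 and no padding: the helper `slide_kernel` and the 2x2 kernel below are hypothetical, chosen only for illustration. Like most deep learning layers, it does not flip the kernel, so strictly speaking it computes a cross-correlation, which is what CNNs call convolution.

```python
import numpy as np

def slide_kernel(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Stride-1, no-padding sliding window (cross-correlation, as CNN layers compute it)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]           # region currently under the kernel
            feature_map[i, j] = np.sum(patch * kernel)  # element-wise multiply, then sum
    return feature_map

image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])  # hypothetical 2x2 kernel for illustration
feature_map = slide_kernel(image, kernel)
print(feature_map)
```

Every entry of the 3x3 result is -5, because each pixel in this test image is exactly 5 smaller than its lower-right neighbor.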

2. Kernel Structure

- Size: Kernels are usually small, such as 3x3, 5x5 or 7x7 matrices, compared to the size of the input data. The kernel size determines how large a neighborhood of the input is considered at one time for any given feature extraction operation.

- Depth: The depth of a kernel matches the depth (number of channels) of the input volume. For example, if the input is a color image with three color channels (RGB), each kernel will also have three channels.
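A small NumPy sketch of kernel depth, assuming a random 5x5 RGB image and a random 3x3x3 kernel (both hypothetical): the kernel spans all three channels, and the products are summed over rows, columns and channels, so one kernel still produces a single 2-D feature map.

```python
import numpy as np

rng = np.random.default_rng(0)
rgb_image = rng.random((5, 5, 3))  # height x width x 3 channels (R, G, B)
kernel = rng.random((3, 3, 3))     # 3x3 spatial size, depth 3 to match the input channels

kh, kw, _ = kernel.shape
out_h = rgb_image.shape[0] - kh + 1
out_w = rgb_image.shape[1] - kw + 1
feature_map = np.zeros((out_h, out_w))
for i in range(out_h):
    for j in range(out_w):
        patch = rgb_image[i:i + kh, j:j + kw, :]    # 3x3x3 block spanning all channels
        feature_map[i, j] = np.sum(patch * kernel)  # sum over rows, columns AND channels

print(feature_map.shape)  # (3, 3): one 2-D map per kernel, regardless of input depth
```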

3. Learning Process

The values in kernels are not predetermined; they are learned during training through backpropagation and gradient descent, which adjust the kernel values to minimize the network's loss function. This allows the kernels to become better at extracting useful features.
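The following toy sketch shows gradient descent adjusting a kernel to reduce a loss. It is a deliberately simplified setup, not a full CNN: a single 3x3 kernel, no bias or activation, and a mean-squared-error loss against the output of a known Sobel kernel. The target kernel, image size, learning rate and step count are all assumptions chosen for illustration, and `correlate2d_valid` is a hypothetical helper. The gradient is derived by hand, which is the step backpropagation automates in a real network.

```python
import numpy as np

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window, accumulated one kernel offset at a time."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for a in range(kh):
        for b in range(kw):
            out += kernel[a, b] * image[a:a + oh, b:b + ow]
    return out

rng = np.random.default_rng(0)
image = rng.random((16, 16))
# Hypothetical "ideal" kernel (Sobel, vertical edges) that generates the training targets
true_kernel = np.array([[-1.0, 0.0, 1.0],
                        [-2.0, 0.0, 2.0],
                        [-1.0, 0.0, 1.0]])
target = correlate2d_valid(image, true_kernel)

kernel = np.zeros((3, 3))  # the kernel being learned starts with no knowledge
learning_rate = 0.2        # assumed step size, small enough for this problem to converge
for _ in range(2000):
    error = correlate2d_valid(image, kernel) - target  # prediction minus target
    # Gradient of the mean squared error: each kernel weight receives the error
    # multiplied by the pixels it was applied to, averaged over output positions.
    oh, ow = error.shape
    grad = np.empty_like(kernel)
    for a in range(3):
        for b in range(3):
            grad[a, b] = 2.0 * np.sum(error * image[a:a + oh, b:b + ow]) / error.size
    kernel -= learning_rate * grad                      # gradient-descent update

final_loss = np.mean((correlate2d_valid(image, kernel) - target) ** 2)
print(np.round(kernel, 3))
print("final loss:", final_loss)
```

Starting from all zeros, the kernel converges to approximately the Sobel kernel that generated the targets, which is the same mechanism, on a much smaller scale, by which CNN kernels pick up useful features.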

4. Feature Maps

The output of applying a kernel to an image is called a feature map or activation map. It shows where the specific feature the kernel responds to was detected in the input. For example, one kernel might detect vertical edges; when applied to the image, it generates a feature map that highlights the areas where vertical edges are present.
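To make the vertical-edge example concrete, here is a NumPy sketch assuming a synthetic 6x6 image that is dark on the left and bright on the right, and a Prewitt-style vertical-edge kernel (both chosen for illustration). It uses `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later) to collect every 3x3 patch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

image = np.zeros((6, 6))
image[:, 3:] = 1.0  # dark left half, bright right half: one vertical edge

vertical_edge_kernel = np.array([[-1.0, 0.0, 1.0],
                                 [-1.0, 0.0, 1.0],
                                 [-1.0, 0.0, 1.0]])

windows = sliding_window_view(image, (3, 3))  # every 3x3 patch, shape (4, 4, 3, 3)
# Multiply each patch by the kernel and sum, giving one value per position
feature_map = np.einsum("ijkl,kl->ij", windows, vertical_edge_kernel)
print(feature_map)
```

Each row of the 4x4 feature map is [0, 3, 3, 0]: the response is zero over flat regions and large only where the window straddles the edge.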

5. Stacking Multiple Kernels

Multiple kernels are used at each layer of a CNN, which allows the network to extract various features at each layer. The feature maps produced by these kernels are stacked to form the input for the next layer, creating a hierarchy of features that goes from simple to complex as we move deeper into the network.
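Here is a NumPy sketch of stacking, assuming two hypothetical layers with random kernels (the bias terms and activation functions that real CNN layers add are omitted, and `conv_layer` is an illustrative helper name). Four kernels in the first layer yield four feature maps; stacked, they form a 4-channel volume, so each second-layer kernel must have depth 4.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv_layer(volume, kernels):
    """Apply each (kh, kw, depth) kernel to an (H, W, depth) volume and stack the feature maps."""
    kh, kw = kernels[0].shape[:2]
    windows = sliding_window_view(volume, (kh, kw), axis=(0, 1))  # (H', W', depth, kh, kw)
    maps = [np.einsum("ijckl,klc->ij", windows, k) for k in kernels]
    return np.stack(maps, axis=-1)  # stacked feature maps become the next layer's channels

rng = np.random.default_rng(0)
image = rng.random((8, 8, 1))                       # one grayscale channel
layer1_kernels = rng.standard_normal((4, 3, 3, 1))  # 4 kernels, depth 1
layer2_kernels = rng.standard_normal((2, 3, 3, 4))  # 2 kernels, depth 4 (= layer-1 kernel count)

hidden = conv_layer(image, layer1_kernels)
output = conv_layer(hidden, layer2_kernels)
print(hidden.shape, output.shape)  # (6, 6, 4) (4, 4, 2)
```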

Types of Kernels

Different types of kernels are used to extract different features from the input data. Some common types of kernels include:

1. Edge Detection Kernels

These kernels highlight vertical, horizontal or diagonal edges within an image; edges are important for recognizing objects. Some of them are:

- Sobel Filter: Used to find horizontal or vertical edges. It highlights pixels where the intensity changes sharply by approximating the first derivative (gradient) of the image.

- Prewitt Filter: Similar to Sobel but uses a different weighting in the matrix: Prewitt weights the three rows (or columns) equally (1, 1, 1), while Sobel gives the center one more weight (1, 2, 1).

- Laplacian Filter: Detects edges based on the second derivative of the image. Because it responds to intensity changes in all directions at once, it also detects diagonal edges.
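These kernels can be written down and tried on a synthetic image, as in this NumPy sketch. The bright-square test image is an assumption, the Laplacian shown is the common 4-neighbor form (an 8-neighbor variant with -8 at the center is also widely used), and `correlate2d_valid` is a hypothetical helper name. Combining the horizontal and vertical Sobel responses with `np.hypot` gives the gradient magnitude, a standard way to measure edge strength in every direction.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # every kh x kw patch
    return np.einsum("ijkl,kl->ij", windows, kernel)    # multiply each patch by the kernel and sum

sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)   # vertical edges
sobel_y = sobel_x.T                                                      # horizontal edges
prewitt_x = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)  # equal row weights
laplacian = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)    # second derivative

image = np.zeros((7, 7))
image[2:5, 2:5] = 1.0  # bright 3x3 square on a dark background

gx = correlate2d_valid(image, sobel_x)
gy = correlate2d_valid(image, sobel_y)
edge_strength = np.hypot(gx, gy)  # gradient magnitude combines both directions
print(np.round(edge_strength, 2))
print(correlate2d_valid(image, laplacian))
```

All four kernels sum to zero, so every one of them returns 0 over a perfectly flat region and responds only where the intensity changes.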

2. Sharpening Kernels

Sharpening kernels enhance edges and details in an image, making it clearer and more defined. For example, the following kernel sharpens an image by emphasizing the differences between neighboring pixels:

Example of a Sharpening Kernel:

$$
\begin{bmatrix}
0 & -1 & 0 \\
-1 & 5 & -1 \\
0 & -1 & 0
\end{bmatrix}
$$

This kernel amplifies the difference between the current pixel and its four neighbors, which makes edges more distinct. Its weights sum to 1, so flat regions are left unchanged.
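A quick NumPy check of the sharpening kernel, assuming a synthetic image whose intensity steps from 0.2 through 0.5 to 0.8 across its columns (the helper name `correlate2d_valid` is hypothetical). This kernel equals the identity kernel minus the 4-neighbor Laplacian, which is why it boosts local contrast.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # every kh x kw patch
    return np.einsum("ijkl,kl->ij", windows, kernel)    # multiply each patch by the kernel and sum

sharpen = np.array([[0, -1, 0],
                    [-1, 5, -1],
                    [0, -1, 0]], dtype=float)

# A soft vertical step: intensity goes 0.2 -> 0.5 -> 0.8 across the columns
image = np.tile([0.2, 0.2, 0.2, 0.5, 0.8, 0.8, 0.8], (5, 1))
sharpened = correlate2d_valid(image, sharpen)
print(np.round(sharpened, 2))
```

Each output row is [0.2, -0.1, 0.5, 1.1, 0.8]: the flat regions keep their values, while the pixels on either side of the step are pushed apart (down to -0.1 and up to 1.1), which is what makes the edge look crisper.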

3. Smoothing (Blurring) Kernels

Smoothing kernels reduce noise and fine detail in images. This is useful for pre-processing images before extracting higher-level features.

- Box Blur: Replaces each pixel with the unweighted average of the pixels in its neighborhood.

- Gaussian Blur: Applies a weighted average which gives more importance to pixels closer to the center.
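The two blur kernels can be compared on a noisy image with this NumPy sketch. The 3x3 Gaussian here is the common binomial approximation (1-2-1 weights, divided by 16) rather than a kernel sampled from an exact Gaussian, and the noisy test image is synthetic; both are assumptions for illustration, as is the helper name `correlate2d_valid`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # every kh x kw patch
    return np.einsum("ijkl,kl->ij", windows, kernel)    # multiply each patch by the kernel and sum

box_blur = np.ones((3, 3)) / 9.0  # plain average of the 3x3 neighborhood
gaussian_blur = np.array([[1, 2, 1],
                          [2, 4, 2],
                          [1, 2, 1]], dtype=float) / 16.0  # center-weighted approximation of a Gaussian

rng = np.random.default_rng(0)
noisy = 0.5 + rng.normal(scale=0.1, size=(32, 32))  # flat gray image plus random noise

for name, k in [("box", box_blur), ("gaussian", gaussian_blur)]:
    smoothed = correlate2d_valid(noisy, k)
    print(name, "noise std before:", round(noisy.std(), 3), "after:", round(smoothed.std(), 3))
```

Both kernels' weights sum to 1, so overall brightness is preserved while the random pixel-to-pixel variation (the standard deviation) drops noticeably.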

4. Embossing Kernels

These kernels create a 3D effect by highlighting edges on one side and adding a shadow on the other. This is useful for texture analysis or for visually enhancing images.

Example of an Embossing Kernel:

$$
\begin{bmatrix}
-2 & -1 & 0 \\
-1 & 1 & 1 \\
0 & 1 & 2
\end{bmatrix}
$$
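Here is a NumPy sketch of this embossing kernel applied to a synthetic image containing a bright vertical band (the image and the helper name `correlate2d_valid` are assumptions for illustration).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # every kh x kw patch
    return np.einsum("ijkl,kl->ij", windows, kernel)    # multiply each patch by the kernel and sum

emboss = np.array([[-2, -1, 0],
                   [-1,  1, 1],
                   [ 0,  1, 2]], dtype=float)

image = np.full((5, 8), 0.5)
image[:, 3:6] = 0.8  # a brighter vertical band on a mid-gray background
embossed = correlate2d_valid(image, emboss)
print(np.round(embossed, 2))
```

Each output row is [0.5, 1.4, 1.7, 0.8, -0.1, -0.4]. Flat areas keep their value because the weights sum to 1, the rising edge of the band is brightened and the falling edge is darkened, which produces the raised, shadowed look.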

5. Custom Kernels

Custom kernels are learned from the data itself during training, which lets the network learn the best features for its task, such as recognizing faces or detecting objects. Learning kernels from data allows the network to adapt to different tasks and to improve as training progresses.

6. Frequency-Specific Kernels

These kernels target different levels of detail in an image. High-pass filters detect fine details such as edges by keeping high-frequency components, while low-pass filters capture smooth areas or large patterns by keeping low-frequency components. These filters help the network highlight or smooth out specific features in an image, depending on what the task needs.
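One common way to relate the two is sketched in NumPy below: take a box blur as the low-pass kernel and define the high-pass kernel as the identity kernel minus that blur. The kernel choices, the ramp-plus-noise test image and the helper name `correlate2d_valid` are assumptions for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(image, kernel):
    """Stride-1, no-padding sliding window (hypothetical helper)."""
    windows = sliding_window_view(image, kernel.shape)  # every kh x kw patch
    return np.einsum("ijkl,kl->ij", windows, kernel)    # multiply each patch by the kernel and sum

low_pass = np.ones((3, 3)) / 9.0  # box blur keeps slow (low-frequency) variation
identity = np.zeros((3, 3))
identity[1, 1] = 1.0              # passes the center pixel through unchanged
high_pass = identity - low_pass   # what is left: fast (high-frequency) detail

rng = np.random.default_rng(0)
x = np.linspace(0, 1, 16)
image = np.add.outer(x, x) + 0.05 * rng.standard_normal((16, 16))  # smooth ramp + fine noise

low = correlate2d_valid(image, low_pass)
high = correlate2d_valid(image, high_pass)
centre = image[1:-1, 1:-1]              # pixels the 3x3 "valid" outputs are centered on
print(np.allclose(low + high, centre))  # True: the two bands add back up to the image
```

Because the two kernels add up to the identity, the low- and high-frequency outputs sum back to the original pixels. The high-pass kernel returns zero on a smooth linear ramp (the symmetric 3x3 average of a linear function equals its center value), so in this image it keeps essentially only the fine-grained noise.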

How Do Kernels Operate in a CNN?

Kernel Working

Kernel WorkingVarious steps involved in how kernels work in a CNN during the convolution operation are:

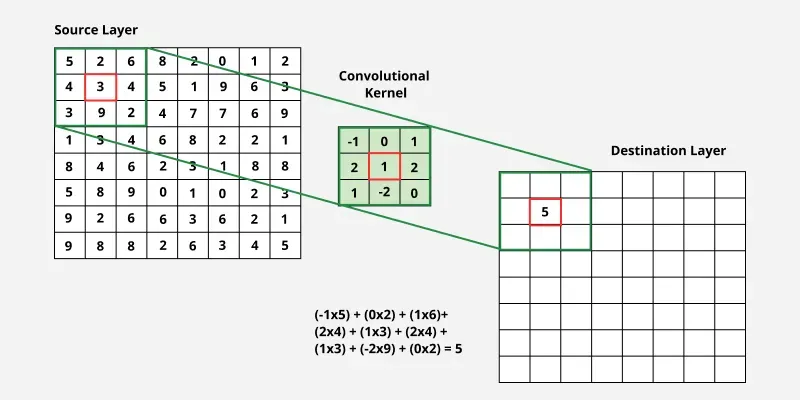

1. Initial Placement

The kernel starts at the top-left corner of the input image. Starting there and sliding systematically across the image ensures that the kernel scans every part of it. In the image above, both the input image and the kernel are 3×3 matrices.

2. Dot Product Calculation

1. Element-wise Multiplication: Each kernel value is multiplied by the corresponding pixel value of the image at the current position.

2. Summation: The results of these multiplications are added together to produce a single output value. This value represents how well the section of the image matches the pattern defined by the kernel.

For the first position, we multiply the corresponding elements of the kernel and the input image:

(−1×5)+(0×2)+(1×6)+(2×4)+(1×3)+(2×4)+(1×3)+(−2×9)+(0×2)

Breaking it down: (−5)+(0)+(6)+(8)+(3)+(8)+(3)+(−18)+(0)= 5
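The arithmetic can be checked with NumPy. The figure is not reproduced here, so the two 3x3 matrices below are reconstructed from the products in the sum, assuming the first factor in each product is the kernel value and that the terms are listed row by row; the pairing of values, and therefore the sum, is exactly as written above.

```python
import numpy as np

# Assumed layout: first factor of each product = kernel entry, second = image entry,
# read left to right, top to bottom from the worked sum.
kernel = np.array([[-1, 0, 1],
                   [2, 1, 2],
                   [1, -2, 0]])
image_patch = np.array([[5, 2, 6],
                        [4, 3, 4],
                        [3, 9, 2]])

products = kernel * image_patch  # element-wise multiplication
print(products)
print(products.sum())            # 5
```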

3. Recording the Output

- The output value (5 here) from the summation is stored in the feature map at the corresponding location.

- This output, or activation, shows how strongly the kernel detects certain features, such as edges or textures, at that location in the image.

- Feature Map after the operation: [5]

4. Sliding the Kernel

- The kernel moves across the image and repeats the process at each position. The stride controls how far the kernel moves each time (usually 1 or 2 pixels).

- Edge coverage: When the kernel reaches the edges of the image, padding (adding extra pixels, usually zeros, around the border) lets the kernel fit over the edge pixels.
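Stride and padding together determine the size of the feature map. The NumPy sketch below assumes zero padding, a 5x5 input and a 3x3 all-ones kernel (all chosen for illustration; `conv2d` and `output_size` are hypothetical helper names). It applies both settings and compares the resulting shapes with the standard output-size formula floor((n + 2p - k) / s) + 1, where n is the input size, k the kernel size, p the padding and s the stride.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d(image, kernel, stride=1, padding=0):
    """Sliding-window cross-correlation with zero padding and a configurable stride."""
    padded = np.pad(image, padding, mode="constant", constant_values=0.0)   # extra border pixels
    windows = sliding_window_view(padded, kernel.shape)[::stride, ::stride]  # keep every stride-th position
    return np.einsum("ijkl,kl->ij", windows, kernel)

def output_size(n, k, stride=1, padding=0):
    """Standard formula: floor((n + 2*padding - k) / stride) + 1."""
    return (n + 2 * padding - k) // stride + 1

image = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.ones((3, 3))
for stride, padding in [(1, 0), (1, 1), (2, 0), (2, 1)]:
    out = conv2d(image, kernel, stride, padding)
    print(f"stride={stride} padding={padding} -> {out.shape}, formula gives {output_size(5, 3, stride, padding)}")
```

With stride 1, a padding of 1 keeps the 5x5 size, while stride 2 roughly halves it.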

5. Complete Coverage

- After the kernel has been applied to the entire image, the feature map is complete. In this case, because the image and the kernel are both 3×3 and no padding is used, the feature map contains just one value, 5, which represents how well the kernel matched the pattern at that position.

We will use the TensorFlow and NumPy libraries to implement this. Here we perform a convolution on a 5x5 grayscale image using a 3x3 kernel.

- np.random.random([1, 5, 5, 1]): Creates a single 5x5 image with one channel filled with random values and the batch size is 1.

- tf.keras.layers.Conv2D: Defines a convolutional layer in TensorFlow using Keras. This layer has 1 filter of size 3x3 with a stride of 1 and 'valid' padding, which means no padding is applied, so the output shrinks from 5x5 to 3x3.

Python

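import tensorflow as tf
import numpy as np

np.random.seed(0)  # fixes the input image only; the kernel's initial weights are random, so printed values can vary between runs

# One 5x5 grayscale image, shape (batch, height, width, channels); Keras layers default to float32
input_image = np.random.random([1, 5, 5, 1]).astype(np.float32)

# 1 filter of size 3x3, stride 1, 'valid' (no) padding, so the 5x5 input gives a 3x3 output
conv_layer = tf.keras.layers.Conv2D(filters=1, kernel_size=(3, 3), strides=(1, 1), padding='valid')

output = conv_layer(input_image)  # the layer creates its kernel weights on this first call

print("Shape of output:", output.shape)  # (1, 3, 3, 1)
print("Output of the convolution:", output.numpy())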

Output:

[Figure: Convolution using Kernel]

The operation of kernels in Convolutional Neural Networks (CNNs) is important because it allows these networks to learn and detect specific patterns in images, such as edges, textures and shapes, by systematically applying filters across the image.