Feature Selection using Chi-Square Test

Last Updated: 15 May, 2025

Feature selection is an important step in building machine learning models. It helps improve model performance by selecting only the most relevant features, reducing noise and computational cost. One common method for feature selection in classification problems is the Chi-Square test.

The Chi-Square test measures the association between a categorical independent variable and a categorical target variable. It helps us determine whether an independent variable is related to the target variable or independent of it. We use it when:

- The target variable is categorical, such as Yes/No, Pass/Fail or Spam/Not Spam.

- The independent variables are also categorical, such as Gender, Education Level or Purchase Category.
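For reference, the standard (Pearson) Chi-Square statistic for a feature with r categories and a target with c classes compares the observed counts O_ij in each feature/target cell with the counts E_ij expected if the two variables were independent. N is the total number of samples, and the statistic has (r - 1)(c - 1) degrees of freedom:

```latex
\chi^2 = \sum_{i=1}^{r} \sum_{j=1}^{c} \frac{(O_{ij} - E_{ij})^2}{E_{ij}},
\qquad
E_{ij} = \frac{\left(\sum_{j'=1}^{c} O_{ij'}\right)\left(\sum_{i'=1}^{r} O_{i'j}\right)}{N},
\qquad
\text{df} = (r - 1)(c - 1)
```

A large value means the observed counts deviate strongly from what independence would predict.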

Steps for Feature Selection Using Chi-Square Test

- Prepare the Data: Ensure that both the independent variables and target variable are categorical.

- Convert Categories to Numbers: Use encoding techniques such as Label Encoding or One-Hot Encoding, since scikit-learn's chi2 function expects non-negative numeric input.

- Compute Chi-Square Scores: Calculate the Chi-Square statistic for each feature relative to the target variable.

- Select Top Features: Choose the features with the highest Chi-Square scores, since higher scores indicate stronger evidence of a relationship with the target variable.
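To make the "Compute Chi-Square Scores" step concrete, here is a minimal sketch that computes the statistic for a single feature by hand from a contingency table and checks it against SciPy's scipy.stats.chi2_contingency. The tiny Gender/Purchase table is hypothetical toy data, not the article's dataset; correction=False turns off Yates' continuity correction, which SciPy applies by default to 2x2 tables.

```python
import numpy as np
import pandas as pd
from scipy.stats import chi2_contingency

# Hypothetical toy data: one categorical feature and a binary target
data = pd.DataFrame({
    "Gender": ["M", "F", "M", "F", "M", "F", "M", "M"],
    "Purchase": ["Yes", "No", "Yes", "Yes", "No", "No", "Yes", "Yes"],
})

# Observed counts: rows = feature categories, columns = target classes
observed = pd.crosstab(data["Gender"], data["Purchase"]).to_numpy()

# Expected counts under independence: row total * column total / grand total
row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
expected = row_totals * col_totals / observed.sum()

# Chi-square statistic: sum of (O - E)^2 / E over all cells
chi2_manual = ((observed - expected) ** 2 / expected).sum()

# SciPy without Yates' correction should report the same statistic
chi2_scipy, p_value, dof, expected_scipy = chi2_contingency(observed, correction=False)

print(f"manual chi2 = {chi2_manual:.4f}, scipy chi2 = {chi2_scipy:.4f}")
print(f"p-value = {p_value:.4f}, degrees of freedom = {dof}")
```

With only eight rows the p-value here is not meaningful; the point is that the hand computation and the library agree.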

For better understanding refer to: Chi-square test in Machine Learning

Real-World Example: Customer Purchase Prediction

We will use a real-world dataset, the Predict Customer Purchase Behavior Dataset. It contains demographic information, purchasing habits and other features for predicting customer purchase behavior.

Step 1: Loading and Preparing the Dataset

We use the Pandas library to load the dataset into a DataFrame. You can download the dataset from here.

Python

import pandas as pd

df = pd.read_csv('customer_purchase_behavior.csv')

print(df.head())

Output:

Dataset

This step gives us an initial look at the data and the structure of the dataset.

Step 2: Data Summary

We summarize the dataset to understand its properties.

Python

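# df.info() prints its summary directly and returns None,
# so it is called without print() to avoid an extra "None" line
df.info()

print(df.describe(include='all'))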

Output:

Data Summary

This provides an overview of the column data types, non-null counts (which reveal missing values) and summary statistics for each column.

Step 3: Data Cleaning

Cleaning makes the data suitable for analysis. We count the missing values in each column, drop any rows that contain them and then confirm that none remain, so the dataset is complete and ready for processing.

Python

print(df.isnull().sum())

df = df.dropna()

print(df.isnull().sum())

Output:

Data Cleaning

Step 4: Feature Encoding

Since scikit-learn's chi2 function requires non-negative numerical input, we convert the categorical variables into integers using label encoding. This lets the categorical variables be used in statistical tests and machine learning models.

Python

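from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

categorical_features = ['Gender', 'Age', 'AnnualIncome', 'ProductCategory']

# Replace each column's values with integer codes 0, 1, 2, ...
# (fit_transform refits the encoder on every column)
for feature in categorical_features:
    df[feature] = le.fit_transform(df[feature])

# Encode the target into a new 'Purchase' column
df['Purchase'] = le.fit_transform(df['PurchaseStatus'])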
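The method steps also mention One-Hot Encoding. For a nominal feature such as a product category, one-hot encoding gives each category its own 0/1 column, so chi2 scores each category separately instead of treating arbitrary integer codes as if they had an order. The sketch below uses pandas.get_dummies on hypothetical toy data (not the article's dataset).

```python
import pandas as pd
from sklearn.feature_selection import chi2

# Hypothetical toy data: one nominal feature and a binary target
df_toy = pd.DataFrame({
    "ProductCategory": ["A", "B", "C", "A", "B", "C", "A", "A"],
    "PurchaseStatus": [1, 0, 0, 1, 0, 1, 1, 1],
})

# One-hot encode: each category becomes its own 0/1 indicator column
X_onehot = pd.get_dummies(df_toy[["ProductCategory"]], dtype=int)
y_toy = df_toy["PurchaseStatus"]

# chi2 returns one score and one p-value per indicator column
scores, p_values = chi2(X_onehot, y_toy)

for column, score, p in zip(X_onehot.columns, scores, p_values):
    print(f"{column}: score={score:.4f}, p={p:.4f}")
```

Because each indicator column is scored on its own, selecting with this encoding keeps or drops individual categories rather than the whole original column.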

Step 5: Applying Chi-Square Test

Now we will apply the Chi-Square test to determine which features are most relevant to the target variable. This step helps identify the features with the strongest relationship to customer purchases.

Python

from sklearn.feature_selection import chi2, SelectKBest

X = df[categorical_features]

y = df['Purchase']

selector = SelectKBest(score_func=chi2, k=2)

X_new = selector.fit_transform(X, y)

feature_scores = selector.scores_

selected_features = X.columns[selector.get_support()]

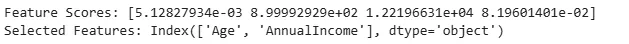

print("Feature Scores:", feature_scores)

print("Selected Features:", selected_features)

Output:

Result

This test assigns higher scores to features that are more strongly related to the target variable, and SelectKBest with k=2 keeps the two highest-scoring features. The results show the following scores:

- Gender: 0.0051

- Age: 899.99

- Annual Income: 12,219.66

- Product Category: 0.0819

From these scores, Age and Annual Income have the highest Chi-Square values, indicating that they are the most strongly associated with customer purchase behavior among the four features tested. These two features are selected for the model, while Gender and Product Category are excluded because of their weak statistical relationship with the target variable.
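When reading the scores, it can help to view them next to their p-values (SelectKBest exposes both as scores_ and pvalues_) and to keep in mind that scikit-learn's chi2 treats feature values as frequencies. Multiplying a column by a constant therefore multiplies its score by the same constant. The sketch below uses synthetic data; the column names are hypothetical, and "Signal" is constructed to depend on the target while the other two columns are random by construction.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import SelectKBest, chi2

# Hypothetical synthetic data standing in for encoded features
rng = np.random.default_rng(0)
n = 500
y = rng.integers(0, 2, size=n)
X = pd.DataFrame({
    "Gender": rng.integers(0, 2, size=n),           # independent of y by construction
    "ProductCategory": rng.integers(0, 5, size=n),  # independent of y by construction
    "Signal": y * 3 + rng.integers(0, 3, size=n),   # depends on y by construction
})

selector = SelectKBest(score_func=chi2, k=2).fit(X, y)

# Scores and p-values side by side, highest score first
ranking = pd.DataFrame(
    {"score": selector.scores_, "p_value": selector.pvalues_},
    index=X.columns,
).sort_values("score", ascending=False)
print(ranking)
print("Selected:", list(X.columns[selector.get_support()]))

# Scaling a column by 10 scales its chi2 score by 10
score_original, _ = chi2(X[["Signal"]], y)
score_scaled, _ = chi2(X[["Signal"]] * 10, y)
print("Score ratio after scaling:", score_scaled[0] / score_original[0])
```

Because the score grows with a column's magnitude, scores of label-encoded columns with very different numbers of distinct codes (for example a binary column next to one with hundreds of codes) are worth comparing with that scale effect in mind.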