Data Structures and Algorithms (DSA) refer to the study of methods for organizing and storing data and the design of procedures (algorithms) for solving problems, which operate on these data structures. DSA is one of the most important skills that every computer science student must have. It is often seen that people with good knowledge of these technologies are better programmers than others and thus, crack the interviews of almost every tech giant, including companies like Google, Microsoft, Amazon, and Facebook (now Meta). This DSA tutorial aims to help you learn Data Structures and Algorithms (DSA) quickly and easily.

Data Structure & Algorithm Tutorial

Data Structure & Algorithm TutorialIntroduction to DSA

Data Structures and Algorithms (DSA) are fundamental in computer science that help us to organize and process data efficiently. They are used in solving common software challenges, from managing large data sets to optimizing the speed of tasks. Here’s why DSA is essential:

- DSA helps in storing and managing data efficiently, making it easier to retrieve and use when needed.

- Whether it’s finding the shortest path in a GPS system or optimizing search results in a search engine, DSA play an important role to solve such problems.

- By understanding DSA, you can design systems more efficiently, which is very important in areas like web applications, databases, and machine learning etc.

Mastering DSA is not only important for developing high-quality software but also for career growth. Top Companies like Google, Microsoft, Amazon, Apple, Meta and many other companies heavily focus on data structures and algorithms during interviews. Learning DSA will boost your problem-solving abilities and make you a stronger programmer in today’s competitive tech world.

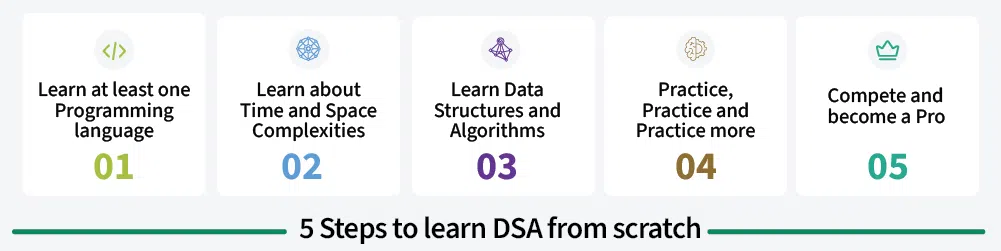

The first and foremost thing is dividing the total procedure into little pieces which need to be done sequentially. The complete process to learn DSA from scratch can be broken into 5 parts:

- Learn at-least one programming language (C++, Java, Python or JavaScript)

- Learn Data Structures

- Learn Algorithms

- Learn about Time and Space complexities

- Practice Problems on DSA

Hoping you have learned a programming language of your choice, let us move forward with the next step to learn DSA in this DSA tutorial.

Here comes the most important and the most awaited stage of the roadmap for learning data structure and algorithm - the stage where you start learning about DSA.

The topic of DSA consists of two parts:

Though they are two different things, they are highly interrelated, and it is very important to follow the right track to learn them most efficiently. If you are confused about which one to learn first, we recommend you to go through our detailed analysis on the topic: What should I learn first- Data Structures or Algorithms?

In Data Structures and Algorithms (DSA), the main goal is to solve problems effectively and efficiently. To determine the efficiency of a program, we look at two types of complexities:

- Time Complexity: This tells us how much time our code takes to run.

- Space Complexity: This tells us how much memory our code uses.

To compare efficiencies of algorithms, we use asymptotic notation, a mathematical tool that estimates time based on input size without running the code. It focuses on the number of basic operations in the program.

| Notation | Description |

|---|

| Big-O (Ο) | Describes the worst-case scenario, providing an upper time bound of algorithm. |

| Omega (Ω) | Describes the best-case scenario, offering a lower time bound of algorithm. |

| Theta (θ) | Represents the average complexity of an algorithm of algorithm. |

The most commonly used notation for code analysis is Big O Notation, providing an upper limit on the running time or memory usage concerning the input size.

Related Topics:

2. Mathematical and Bitwise Algorithms

Mathematical algorithms are a class of algorithms that solve problems related to mathematical concepts. They are widely used in various fields, including Computer graphics, Numerical analysis, Optimization and Cryptography.

| Algorithm | Description |

|---|

| GCD and LCM | Find the greatest common divisor and least common multiple of two numbers. |

| Prime Factorization | Decompose a number into its prime factors. |

| Fibonacci Numbers | Generate the Fibonacci sequence, where each number is the sum of the two preceding ones. |

| Catalan Numbers | Count the number of valid expressions with a given number of pairs of parentheses. |

| Modular Arithmetic | Perform arithmetic operations on numbers modulo a given value. |

| Euler Totient Function | Count the number of positive integers less than a given number that are relatively prime to it. |

| nCr Computations | Calculate the binomial coefficient, which represents the number of ways to choose r elements from a set of n elements. |

| Prime Numbers and Primality Tests | Determine whether a given number is prime and find prime numbers efficiently. |

| Sieve Algorithms | Find all prime numbers up to a given limit. |

Related Topics:

Bitwise algorithms are algorithms that operate on individual bits of numbers. These algorithms manipulate the binary representation of numbers to perform tasks such as bit manipulation, bitwise logical operations (AND, OR, XOR), shifting bits, and setting or clearing specific bits within a number. Bitwise algorithms are commonly used in low-level programming, cryptography, and optimization tasks where efficient manipulation of individual bits is required.

| Topic | Description |

|---|

| Bit Shifting | Shifts bits to the left or right by a specified number of positions. |

| Left shift (<<) | Shifts bits to the left, effectively multiplying the number by 2. |

| Right shift (>>) | Shifts bits to the right, effectively dividing the number by 2. |

| Extract bits | Using masks to extract specific bits from an integer. |

| Setting bits | Using masks to set specific bits to 1 in an integer. |

| Clearing bits | Using masks to set specific bits to 0 in an integer. |

| Toggling bits | Using XOR (^) to toggle specific bits in an integer. |

| Counting Set bits | Counting the number of set bits (1s) in an integer. |

Related Topics:

Array is a linear data structure that stores a collection of elements of the same data type. Elements are allocated contiguous memory, allowing for constant-time access. Each element has a unique index number.

- Operations on Array:

- Traversal: Iterating through the elements of an array.

- Insertion: Adding an element to the array at a specific index.

- Deletion: Removing an element from the array at a specific index.

- Searching: Finding an element in the array by its value or index.

- Types of Arrays:

- One-dimensional array: A simple array with a single dimension.

- Multidimensional array: An array with multiple dimensions, such as a matrix.

- Applications of Array:

- Storing data in a sequential manner

- Implementing queues, stacks, and other data structures

- Representing matrices and tables

- Related Topics on Array:

A matrix is a two-dimensional array of elements, arranged in rows and columns. It is represented as a rectangular grid, with each element at the intersection of a row and column.

- Key Concepts:

- Rows: Horizontal lines of elements in a matrix.

- Columns: Vertical lines of elements in a matrix.

- Dimensions: The number of rows and columns in a matrix (e.g., a 3x4 matrix has 3 rows and 4 columns).

- ElementAccess: Elements can be accessed using row and column indices (e.g., M[2][3] refers to the element in row 2, column 3).

- Applications of Matrix/Grid:

- Image processing

- Data analysis

- Optimization problems

- Related posts:

A string is a sequence of characters, typically used to represent text. It is considered a data type that allows for the manipulation and processing of textual data in computer programs.

- Operations on String:

- Concatenation: Joining two strings together.

- Comparison: Comparing two strings lexicographically.

- Search: Searching for a substring within a string.

- Modification: Changing or replacing characters within a string.

- Applications of String:

- Text processing

- Pattern matching

- Data validation

- Database management

- Related posts:

Stack is a linear data structure that follows a particular order in which the operations are performed. The order may be LIFO(Last In First Out) or FILO(First In Last Out). LIFO implies that the element that is inserted last, comes out first and FILO implies that the element that is inserted first, comes out last.

- Operation on Stack:

- Push: Adds an element to the top of the stack

- Pop: Removes and returns the element at the top of the stack

- Peek: Returns the element at the top of the stack without removing it

- Size: Returns the number of elements in the stack

- IsEmpty: Checks if the stack is empty

- Applications of Stack:

- Function calls

- Expression evaluation

- Backtracking

- Undo/redo operations

- Related Topics on Stack:

A Queue Data Structure is a fundamental concept in computer science used for storing and managing data in a specific order. It follows the principle of “First in, First out” (FIFO), where the first element added to the queue is the first one to be removed

- Operation on Queue:

- Enqueue: Adds an element to the rear of the queue

- Dequeue: Removes an element from the front of the queue

- Peek: Retrieves the front element without removing it

- IsEmpty: Checks if the queue is empty

- IsFull: Checks if the queue is full

- Type of Queue:

- Applications of Queue:

- Job scheduling

- Message queuing

- Simulation modeling

- Data buffering

- Related Topics:

Recursion is a programming technique where a function calls itself within its own definition. It is usually used to solve problems that can be broken down into smaller instances of the same problem. For Example:Towers of Hanoi (TOH), Factorial Calculation and Fibonacci Sequence etc.

Steps:

- Base Case: Define a condition that stops the recursive calls and provides a solution.

- Recursive Case: Define the steps to break down the problem into smaller instances and make recursive calls.

- Return: Return the solution from the recursive calls and combine them to solve the original problem.

The point which makes Recursion one of the most used algorithms is that it forms the base for many other algorithms such as Tree traversals, Graph traversals, Divide and Conquers Algorithms and Backtracking algorithms.

Related Topics:

As mentioned earlier, the Backtracking algorithm is derived from the Recursion algorithm, with the option to revert if a recursive solution fails, i.e. in case a solution fails, the program traces back to the moment where it failed and builds on another solution. So basically it tries out all the possible solutions and finds the correct one.

Some important and most common problems of backtracking algorithms, that you must solve before moving ahead, are:

| Problem | Description |

|---|

| Knight's tour problem | Finding a sequence of moves by a knight on a chessboard such that it visits every square exactly once. |

| Rat in a Maze | Finding a path from the starting position to the exit in a maze, with obstacles represented as walls. |

| N-Queen Problem | Placing N queens on an N×N chessboard such that no two queens attack each other. |

| Subset Sum Problem | Determining whether there exists a subset of the given set with a given sum. |

| Sudoku | Solving a 9x9 grid puzzle with numbers from 1 to 9 such that each row, column, and 3x3 subgrid contains all the digits without repetition. |

Related Article:

Divide and conquer algorithms follow a recursive strategy to solve problems by dividing them into smaller subproblems, solving those subproblems, and combining the solutions to obtain the final solution.

Steps:

- Divide: Partition the problem into smaller, independent subproblems.

- Conquer: Recursively solve each subproblem.

- Combine: Merge the solutions of the subproblems to obtain the final solution.

Examples:

- Merge Sort: Divides the array into two halves, sorts each half recursively, and merges the sorted halves.

- Quick Sort: Selects a pivot element, partitions the array into two subarrays based on the pivot, and recursively sorts each subarray.

Related Articles:

11. Searching and Sorting Algorithms

Searching algorithms are used to locate specific data within a larger set of data. It helps find the presence of a target value within the data. There are various types of searching algorithms, each with its own approach and efficiency.

- Most common searching algorithms:

- Linear Search: Iterative search from one end to the other.

- Binary Search: Divide-and-conquer search on a sorted array, halving the search space at each iteration.

- Ternary Search: Divide-and-conquer search on a sorted array, dividing the search space into three parts at each iteration.

- Other searching algorithms:

- Related Topics:

Sorting algorithms are used to arranging the elements of a list in a specific order, such as numerical or alphabetical. It organizes the items in a systematic way, making it easier to search for and access specific elements.

There are a lot of different types of sorting algorithms. Some widely used algorithms are:

| Sorting Algorithm | Description |

|---|

| Bubble Sort | Iteratively compares adjacent elements and swaps them if they are out of order. The largest element "bubbles" to the end of the list with each pass. |

| Selection Sort | Finds the minimum element in the unsorted portion of the list and swaps it with the first element. Repeats this process until the entire list is sorted. |

| Insertion Sort | Builds the sorted list one element at a time by inserting each unsorted element into its correct position in the sorted portion. |

| Quick Sort | A divide-and-conquer algorithm that selects a pivot element, partitions the list into two sublists based on the pivot, and recursively applies the same process to the sublists. |

| Merge Sort | Another divide-and-conquer algorithm that recursively divides the list into smaller sublists, sorts them, and then merges them back together to obtain the sorted list. |

Related Topics:

A linked list is a linear data structure that stores data in nodes, which are connected by pointers. Unlike arrays, linked lists are not stored in contiguous memory locations.

- Characteristics of Linked List:

- Dynamic: Linked lists can be easily resized by adding or removing nodes.

- Non-contiguous: Nodes are stored in random memory locations and connected by pointers.

- Sequential access: Nodes can only be accessed sequentially, starting from the head of the list.

- Operations on Linked List:

- Creation: Creating a new linked list or adding a new node to an existing list.

- Traversal: Iterating through the list and accessing each node.

- Insertion: Adding a new node at a specific position in the list.

- Deletion: Removing a node from the list.

- Search: Finding a node with a specific value in the list.

- Types of Linked List:

- Applications of Linked List:

- Linked lists are used in various applications, including:

- Implementing queues and stacks

- Representing graphs and trees

- Maintaining ordered data

- Memory management

- Related Topics:

Hashing is a technique that generates a fixed-size output (hash value) from an input of variable size using mathematical formulas called hash functions. Hashing is used to determine an index or location for storing an item in a data structure, allowing for efficient retrieval and insertion.

- Key Concepts:

- Hash Function: A mathematical function that maps an input to a hash value.

- Hash Table: A data structure that stores key-value pairs, where the key is a hash value and the value is the actual data.

- Collision: When two different keys produce the same hash value.

- Types of Hash Functions:

- Division Method: Divides the input by a constant and uses the remainder as the hash value.

- Mid Square Method: Squares the input and takes the middle digits as the hash value.

- Folding Method: Divides the input into equal-sized blocks and adds them together to get the hash value.

- Multiplication Method: Multiplies the input by a constant and takes the fractional part as the hash value.

- Collision Resolution Techniques:

- Applications of Hashing:

- Efficiently storing and retrieving data in databases and file systems.

- Verifying passwords and digital signatures.

- Distributing requests across multiple servers.

- Generating secure hashes for data integrity and authentication.

- Related posts:

A tree is a non-linear hierarchical data structure consisting of nodes connected by edges, with a top node called the root and nodes having child nodes. It is used in computer science for organizing data efficiently.

- Traversal of Tree: Tree traversal methods are used to visit and process nodes in a tree data structure. The three common traversal methods are:

- In-Order: Visit left subtree, current node, then right subtree.

- Pre-Order: Visit current node, left subtree, then right subtree.

- Post-Order: Visit left subtree, right subtree, then current node.

- Classifications of Trees:

- Classifications of Trees refer to grouping trees based on certain characteristics or criteria. This can involve categorizing trees based on their balance factor, degree of nodes, ordering properties, etc. Below are some important classification of Tree.

| Classification | Description | Type | Description |

|---|

By Degree | Trees can be classified based on the maximum number of children each node can have. | Binary Tree | Each node has at most two children. |

Ternary Tree | Each node has at most three children. |

N-ary Tree | Each node has at most N children. |

By Ordering | Trees can be classified based on the ordering of nodes and subtrees | Binary Search Tree (BST) | Left child < parent < right child for every node. |

Heap | Specialized binary tree with the heap property. |

By Balance | Trees can be classified based on how well-balanced they are. | Balanced Tree | Heights of subtrees differ by at most one. Examples include AVL trees and Red-Black trees. |

Unbalanced Tree | Heights of subtrees can differ significantly, affecting performance in operations like search and insertion. |

A Heap is a complete binary tree data structure that satisfies the heap property: for every node, the value of its children is less than or equal to its own value. Heaps are usually used to implement priority queues, where the smallest (or largest) element is always at the root of the tree.

- Operations of Heap:

- Insert: Adds a new element to the heap while maintaining heap properties.

- Extract-Max/Extract-Min: Removes the root element and restructures the heap.

- Increase/Decrease-Key: Updates the value of a node and restructures the heap.

- Types of Heap:

- Max-Heap: Root node has the maximum value among its children.

- Min-Heap: Root node has the minimum value among its children.

- Applications of Heap:

- Priority queues

- Sorting

- Graph algorithms (e.g., Dijkstra's algorithm)

- Related posts:

A Graph is a non-linear data structure consisting of a finite set of vertices(or nodes) and a set of edges that connect a pair of nodes.

- Traversals of Graph:

- Applications of Graphs:

- Social networks

- Maps and navigation

- Scheduling

- Data mining

- Related posts:

As the name suggests, this algorithm builds up the solution one piece at a time and chooses the next piece which gives the most obvious and immediate benefit i.e., which is the most optimal choice at that moment. So the problems where choosing locally optimal also leads to the global solutions are best fit for Greedy.

Some Important Problem of Greedy Algorithms are:

| Algorithm | Description |

|---|

| Fractional Knapsack | Find the maximum total value of items that can be placed in the knapsack, allowing fractional inclusion of items. |

| Dijkstra's Algorithm | Finds the shortest path from a source vertex to all other vertices in a weighted graph. |

| Kruskal's Algorithm | Finds the minimum spanning tree of a weighted graph. |

| Huffman Coding | Creates an optimal prefix code for a set of symbols, minimizing the total encoding length. |

Related Articles:

Dynamic Programming is a method used in mathematics and computer science to solve complex problems by breaking them down into simpler subproblems. By solving each subproblem only once and storing the results, it avoids redundant computations, leading to more efficient solutions for a wide range of problems.

Key Concepts:

- Optimal Substructure: The optimal solution to a problem can be constructed from the optimal solutions to its subproblems.

- Overlapping Subproblems: Subproblems are often repeated in the larger problem, leading to redundant computations.

- Memoization / Tabulation: Storing the solutions to subproblems to avoid recomputation.

Some important and most common problems of dynamic programming algorithms, that you must solve before moving ahead, are:

| Problem | Description |

|---|

| Fibonacci Sequence | Generating Fibonacci numbers using dynamic programming to avoid redundant calculations. |

| Longest Common Subsequence | Finding the longest subsequence common to two sequences. |

| Longest Increasing Subsequence | Finding the longest subsequence of a given sequence in which the elements are sorted in increasing order. |

| 0/1 Knapsack Problem | Determining the maximum value that can be obtained by selecting items with given weights and values, while not exceeding a specified weight limit. |

| Rod Cutting Problem | Maximizing the profit by cutting a rod of given length into pieces and selling them according to given prices. |

| Coin Change Problem | Determining the number of ways to make change for a given amount using a given set of coin denominations. |

| Edit Distance | Finding the minimum number of operations (insertion, deletion, substitution) required to convert one string into another. |

| Subset Sum Problem | Determining whether there exists a subset of a given set with a given sum. |

| Longest Palindromic Subsequence | Finding the longest subsequence of a given sequence that is a palindrome. |

| Maximum Subarray Sum | Finding the contiguous subarray with the largest sum within a given one-dimensional array. |

| Partition Equal Subset Sum | Determining whether it is possible to partition a given set into two subsets with equal sum. |

| Minimum Cost Path | Finding the minimum cost path from the top-left corner to the bottom-right corner of a given grid. |

| Maximum Product Subarray | Finding the contiguous subarray with the largest product within a given one-dimensional array. |

Related Articles:

Graph algorithms in data structures and algorithms (DSA) are a set of techniques and methods used to solve problems related to graphs, which are a collection of nodes and edges. These algorithms are designed to perform various operations on graphs, such as searching, traversing, finding the shortest path, and determining connectivity. They are essential for solving a wide range of real-world problems, including network routing, social network analysis, and resource allocation.

| Topic | Topic's Description | Algorithm | Algorithm's Description |

|---|

| Graph Traversal | Techniques for visiting all vertices in a graph. | Depth-First Search (DFS) | Explores as far as possible along each branch before backtracking. |

| Breadth-First Search (BFS) | Explores neighbor vertices before moving to the next level of vertices. |

Minimum Spanning Trees | Minimum Spanning Trees are the smallest trees that connect all nodes in a graph without forming cycles, achieved by adding the shortest edges possible. | Kruskal's Algorithm | It finds a minimum spanning tree for a connected weighted graph. It adds the smallest weight edge that does not form a cycle. |

Prim's Algorithm | It also finds a minimum spanning tree for a connected weighted graph. It adds the smallest weight edge that connects two trees. |

| Topological Sorting | Topological sorting is a linear ordering of the vertices in a directed acyclic graph (DAG) such that for every directed edge from vertex u to vertex v, u comes before v in the ordering. | Kahn's Algorithm | Kahn's Algorithm finds a topological ordering of a directed acyclic graph (DAG). |

| DFS-based Algorithm | DFS-based Algorithm use Depth-First Search to perform topological sorting in a directed acyclic graph (DAG). |

Shortest Path | A shortest path in a graph is the path between two vertices in a graph that has the minimum sum of weights along its edges compared to all other paths between the same two vertices. | Dijkstra's Algorithm | Greedy algorithm to find the shortest path between all nodes in O(E * V logV) time. |

Floyd-Warshall Algorithm | Finds the shortest path between all pairs of nodes in O(V^3) time. |

Bellman Ford Algorithm | Finds the shortest path from a single source in O(V * E) time. |

Johnson Algorithm | Finds the shortest paths between all pairs of vertices in O(V^2 logV + V * E) time. |

Strongly Connected Components | A strongly connected component (SCC) of a directed graph is a maximal set of vertices such that there is a path from every vertex in the set to every other vertex in the set. | Kosaraju's Algorithm | Kosaraju's Algorithm is a two-pass algorithm that efficiently finds the strongly connected components (SCCs) of a directed graph. |

Tarjan's Algorithm | Tarjan's Algorithm is another efficient algorithm for finding SCCs in a directed graph |

Related Topics:

Pattern searching is a fundamental technique in DSA used to find occurrences of a specific pattern within a given text.

Below are some some standard pattern searching algorithms:

| Algorithm | Description | Time Complexity |

|---|

| Brute-Force | It compares the pattern with every substring of the text | O(mn) |

| Knuth-Morris-Pratt | It uses a precomputed failure function to skip unnecessary comparisons | O(m + n) |

| Boyer-Moore | It compares the pattern from right to left, skipping characters based on the last mismatch | O(mn) |

| Rabin-Karp | It uses hashing to quickly check for potential matches | O(m + n) |

Related Topics:

The Branch and Bound Algorithm is a method used in combinatorial optimization problems to systematically search for the best solution. It works by dividing the problem into smaller subproblems, or branches, and then eliminating certain branches based on bounds on the optimal solution. This process continues until the best solution is found or all branches have been explored.

Standard Problems on Branch and Bound Algorithm:

Related Topics:

Geometric algorithms are a class of algorithms that solve problems related to geometry. Geometric algorithms are essential for solving a wide range of problems in computer science, such as:

| Algorithm | Description |

|---|

| Convex Hull | Finds the smallest convex polygon that contains a set of points. |

| Closest Pair of Points | Finds the two points in a set that are closest to each other. |

| Line Intersection | Determines whether two lines intersect and, if so, finds the point of intersection. |

| Point in Polygon | Determines whether a given point is inside or outside a polygon. |

Related Topics:

Randomized algorithms are algorithms that use randomness to solve problems. They make use of random input to achieve their goals, often leading to simpler or more efficient solutions.

Types of Randomized Algorithms:

- Las Vegas: Always produces a correct result, but the running time is random.

- Monte Carlo: May produce an incorrect result with a small probability, but the running time is usually faster.

Important Algorithms that uses Randomization Algorithms:

| Algorithm | Description |

|---|

| QuickSort | A randomized sorting algorithm with an average-case time complexity of O(n log n). |

| Skip List | A randomized data structure that provides fast search and insertion operations. |

| Bloom Filter | A probabilistic data structure for efficient set membership testing. |

Practice Problem Cheat Sheets

Curated lists of problems from below articles:

Similar Reads

Top 75 DSA Questions In this post, we present a list of the top 75 data structures and algorithms (DSA) coding questions to help you prepare for a thorough revision for interviews at leading tech companies like Meta, Google, Amazon, Apple, Microsoft, etc. This list helps you to cover an extensive variety of DSA Coding q

2 min read

How to Start Learning DSA? In the journey of programming, every programmer comes across a point where they want to solve a problem in a more efficient way. While finding a way out of this, they eventually come to know about the term DSA. Now, before jumping into how to start learning DSA, let us first know what the term DSA a

13 min read

RoadMap for DSA in Python Mastering Data Structures and Algorithms (DSA) is key to optimizing code and solving problems efficiently. Whether you're building applications or preparing for tech interviews at companies like Google, Microsoft, or Netflix, DSA knowledge is crucial. This roadmap will guide you from basic concepts

4 min read

Complete Roadmap To Learn DSA Mastering DSA enables developers to write optimized code that performs well even with large datasets. The very first step in the journey is the selection of a complete roadmap. This guide provides a structured path, starting from basic concepts to advanced topics. It offers practical steps, resource

5 min read

How to improve your DSA skills? Enhancing data structure and algorithm skills allows for achieving proficiency in programming and excelling in technical interviews. These foundational skills enable efficient problem-solving and algorithm design, fostering success in the competitive world of software development and job interviews.

4 min read