Description

📚 This guide explains how to use YOLOv5 🚀 model ensembling during testing and inference for improved mAP and Recall. UPDATED 25 September 2022.

Ensemble modeling is a process in which multiple diverse models are created to predict an outcome, either by using different modeling algorithms or by training on different data sets. The ensemble then aggregates the predictions of the base models into one final prediction for unseen data. The motivation for using ensemble models is to reduce the generalization error of the prediction. As long as the base models are diverse and independent, the prediction error decreases when the ensemble approach is used. The approach draws on the wisdom of crowds in making a prediction. Even though an ensemble contains multiple base models, it acts and performs as a single model.
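
The claim that independent base models reduce prediction error can be illustrated with a small simulation. This is a minimal sketch using only the Python standard library, not YOLOv5 code: each hypothetical "model" is assumed to predict a true value plus independent Gaussian noise, and the ensemble simply averages the predictions. The function name `simulate` and all parameters are illustrative assumptions.

```python
import random

def simulate(n_models, n_trials=20000, noise_std=1.0, seed=0):
    """Return the RMSE of the average of n_models independent noisy predictors."""
    rng = random.Random(seed)
    true_value = 0.0  # the quantity every toy model tries to predict
    total_squared_error = 0.0
    for _ in range(n_trials):
        # Each model's prediction is the truth plus its own independent noise.
        preds = [true_value + rng.gauss(0.0, noise_std) for _ in range(n_models)]
        ensemble_pred = sum(preds) / len(preds)  # aggregate by averaging
        total_squared_error += (ensemble_pred - true_value) ** 2
    return (total_squared_error / n_trials) ** 0.5

rmse_single = simulate(1)
rmse_ensemble = simulate(4)
print(f"single model RMSE:     {rmse_single:.3f}")
print(f"4-model ensemble RMSE: {rmse_ensemble:.3f}")
```

With independent errors, the averaged prediction's error shrinks roughly with the square root of the number of models. Real detectors trained on the same data have correlated errors, so the gain in practice is smaller than in this idealized setting.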

Before You Start

Clone repo and install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. Models and datasets download automatically from the latest YOLOv5 release.

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

Test Normally

Before ensembling, we want to establish the baseline performance of a single model. This command tests YOLOv5x on COCO val2017 at an image size of 640 pixels. yolov5x.pt is the largest and most accurate model available. Other options are yolov5s.pt, yolov5m.pt and yolov5l.pt, or your own checkpoint from training on a custom dataset, e.g. ./weights/best.pt. For details on all available models, please see our README table.

python val.py --weights yolov5x.pt --data coco.yaml --img 640 --half

Output:

val: data=./data/coco.yaml, weights=['yolov5x.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.65, task=val, device=, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=True, project=runs/val, name=exp, exist_ok=False, half=True

YOLOv5 🚀 v5.0-267-g6a3ee7c torch 1.9.0+cu102 CUDA:0 (Tesla P100-PCIE-16GB, 16280.875MB)

Fusing layers...

Model Summary: 476 layers, 87730285 parameters, 0 gradients

val: Scanning '../datasets/coco/val2017' images and labels...4952 found, 48 missing, 0 empty, 0 corrupted: 100% 5000/5000 [00:01<00:00, 2846.03it/s]

val: New cache created: ../datasets/coco/val2017.cache

Class Images Labels P R mAP@.5 mAP@.5:.95: 100% 157/157 [02:30<00:00, 1.05it/s]

all 5000 36335 0.746 0.626 0.68 0.49

Speed: 0.1ms pre-process, 22.4ms inference, 1.4ms NMS per image at shape (32, 3, 640, 640) # <--- baseline speed

Evaluating pycocotools mAP... saving runs/val/exp/yolov5x_predictions.json...

...

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.504 # <--- baseline mAP

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.688

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.546

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.351

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.551

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.644

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.382

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.628

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.681 # <--- baseline mAR

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.524

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.735

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.826

Ensemble Test

Multiple pretrained models may be ensembled together at test and inference time by simply appending extra models to the --weights argument in any existing val.py or detect.py command. This example tests an ensemble of 2 models together:

- YOLOv5x

- YOLOv5l6

python val.py --weights yolov5x.pt yolov5l6.pt --data coco.yaml --img 640 --half

Output:

val: data=./data/coco.yaml, weights=['yolov5x.pt', 'yolov5l6.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.6, task=val, device=, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=True, project=runs/val, name=exp, exist_ok=False, half=True

YOLOv5 🚀 v5.0-267-g6a3ee7c torch 1.9.0+cu102 CUDA:0 (Tesla P100-PCIE-16GB, 16280.875MB)

Fusing layers...

Model Summary: 476 layers, 87730285 parameters, 0 gradients # Model 1

Fusing layers...

Model Summary: 501 layers, 77218620 parameters, 0 gradients # Model 2

Ensemble created with ['yolov5x.pt', 'yolov5l6.pt'] # Ensemble notice

val: Scanning '../datasets/coco/val2017.cache' images and labels... 4952 found, 48 missing, 0 empty, 0 corrupted: 100% 5000/5000 [00:00<00:00, 49695545.02it/s]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100% 157/157 [03:58<00:00, 1.52s/it]

all 5000 36335 0.747 0.637 0.692 0.502

Speed: 0.1ms pre-process, 39.5ms inference, 2.0ms NMS per image at shape (32, 3, 640, 640) # <--- ensemble speed

Evaluating pycocotools mAP... saving runs/val/exp3/yolov5x_predictions.json...

...

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.515 # <--- ensemble mAP

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.699

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.557

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.356

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.563

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.668

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.387

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.638

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.689 # <--- ensemble mAR

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.526

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.743

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.844

Ensemble Inference
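
At inference time, the outputs of the ensembled models have to be merged into one set of detections. This guide does not describe how that merge is done, so the following is only a sketch of one plausible approach: pool the candidate boxes from every model, then run a single greedy per-class NMS pass over the pooled set. The helper names (`iou`, `pooled_nms`) and the sample boxes are hypothetical; the default thresholds mirror the conf_thres=0.25 and iou_thres=0.45 values that appear in the detect.py log in this section.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def pooled_nms(per_model_detections, conf_thres=0.25, iou_thres=0.45):
    """Pool detections from all models, then run greedy per-class NMS once.

    Each detection is a tuple (x1, y1, x2, y2, confidence, class_id).
    """
    # Pool every model's candidates and drop low-confidence ones.
    pooled = [d for dets in per_model_detections for d in dets if d[4] >= conf_thres]
    # Visit candidates from most to least confident.
    pooled.sort(key=lambda d: d[4], reverse=True)
    kept = []
    for det in pooled:
        # Keep a box unless it overlaps an already kept box of the same class.
        if all(det[5] != k[5] or iou(det[:4], k[:4]) <= iou_thres for k in kept):
            kept.append(det)
    return kept

# Hypothetical outputs of two models on the same image (assumed values).
model_a = [(10, 10, 110, 210, 0.90, 0), (300, 50, 400, 150, 0.30, 1)]
model_b = [(12, 8, 108, 205, 0.85, 0), (200, 200, 260, 280, 0.60, 2)]

result = pooled_nms([model_a, model_b])
for det in result:
    print(det)
```

In this toy input both models find the same class-0 object, so only the higher-confidence box survives, while the objects found by just one model are kept. That is how pooling can raise recall without duplicating detections.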

Append extra models to the --weights argument to run ensemble inference:

python detect.py --weights yolov5x.pt yolov5l6.pt --img 640 --source data/images

Output:

detect: weights=['yolov5x.pt', 'yolov5l6.pt'], source=data/images, imgsz=640, conf_thres=0.25, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, update=False, project=runs/detect, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False

YOLOv5 🚀 v5.0-267-g6a3ee7c torch 1.9.0+cu102 CUDA:0 (Tesla P100-PCIE-16GB, 16280.875MB)

Fusing layers...

Model Summary: 476 layers, 87730285 parameters, 0 gradients

Fusing layers...

Model Summary: 501 layers, 77218620 parameters, 0 gradients

Ensemble created with ['yolov5x.pt', 'yolov5l6.pt']

image 1/2 /content/yolov5/data/images/bus.jpg: 640x512 4 persons, 1 bus, 1 tie, Done. (0.063s)

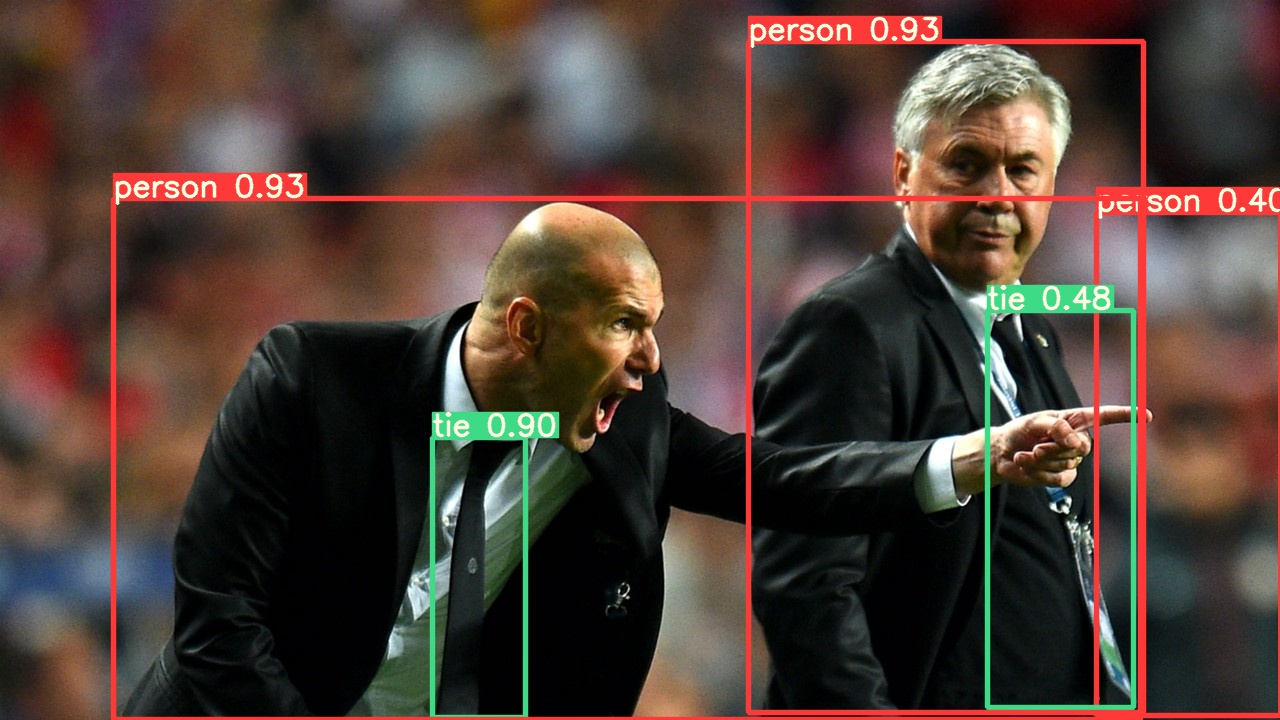

image 2/2 /content/yolov5/data/images/zidane.jpg: 384x640 3 persons, 2 ties, Done. (0.056s)

Results saved to runs/detect/exp2

Done. (0.223s)

Environments

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Notebooks with free GPU:

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Amazon Deep Learning AMI. See AWS Quickstart Guide

- Docker Image. See Docker Quickstart Guide

Status

If this badge is green, all YOLOv5 GitHub Actions Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 training, validation, inference, export and benchmarks on macOS, Windows, and Ubuntu every 24 hours and on every commit.