NVIDIA Enterprise Reference Architectures (Enterprise RAs) can reduce the time and cost of deploying AI infrastructure solutions. They provide a streamlined approach for building flexible and cost-effective accelerated infrastructure while ensuring compatibility and interoperability.

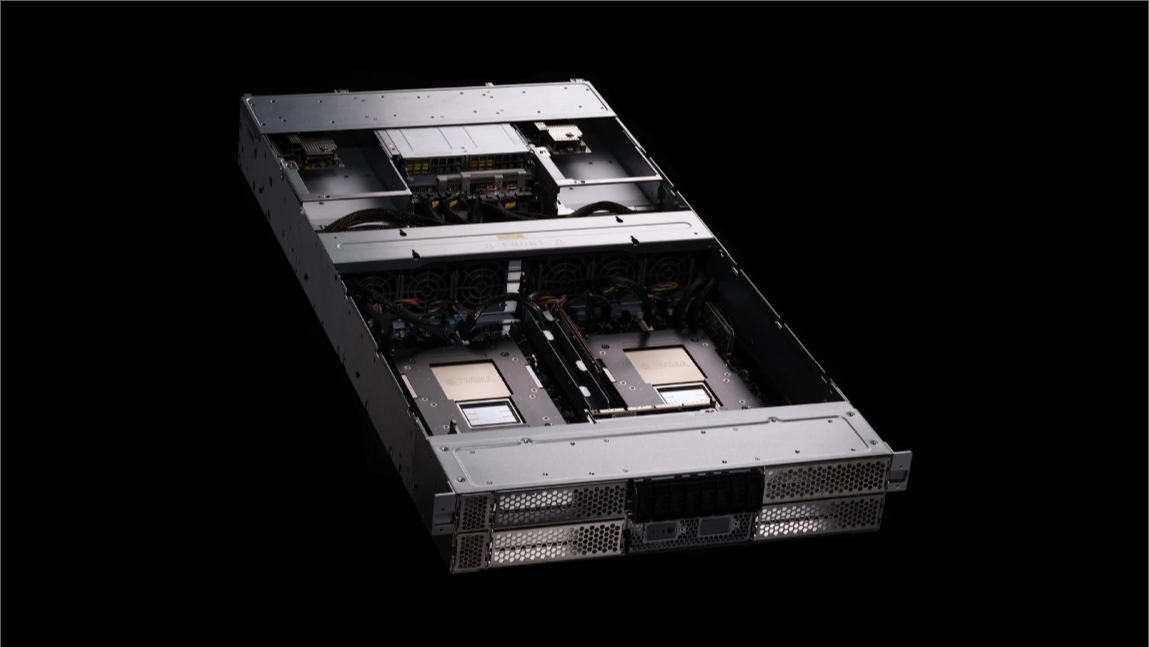

The latest Enterprise RA details an optimized cluster configuration for systems integrated with NVIDIA GH200 NVL2 and the NVIDIA Spectrum-X Ethernet networking platform for AI. The performance-enhancing “secret sauce” of the NVIDIA GH200 NVL2 is that two GH200 Superchips are connected with NVLink to form a single memory domain, which simplifies memory management when developing accelerated applications.

The NVIDIA GH200 Superchip connects a power-efficient NVIDIA Grace CPU and the powerful NVIDIA Hopper GPU through NVIDIA NVLink-C2C, a high-bandwidth, memory-coherent chip-to-chip interconnect. NVIDIA GH200 NVL2 combines two NVIDIA GH200 Superchips in a single node. Compute- and memory-intensive workloads such as single-node LLM inference, retrieval-augmented generation (RAG), recommenders, graph neural networks (GNNs), high-performance computing (HPC), and data processing run impressively on the NVIDIA GH200 NVL2.

As detailed in this post, the two-Grace Hopper Superchip design of GH200 NVL2 works in concert with high-performance, low-latency Spectrum-X networking to unlock an unprecedentedly efficient path to developing AI. Together, the NVIDIA GH200 NVL2 and NVIDIA Spectrum-X networking platform are ideal for developers seeking the flexibility to build on CPUs, then expediently scale to GPUs.

Simplifying memory management with GH200 NVL2

GH200 NVL2 was designed to make system memory easier for developers to use and access. The NVIDIA Grace Hopper architecture brings together the groundbreaking performance of the NVIDIA Hopper GPU and the versatility of the NVIDIA Grace CPU, connected by the high-bandwidth, memory-coherent 900 GB/s NVIDIA NVLink chip-to-chip (C2C) interconnect, which delivers 7x the bandwidth of a PCIe Gen 5 x16 link.

NVLink-C2C memory coherency increases developer productivity, performance, and the amount of GPU-accessible memory. CPU and GPU threads can concurrently and transparently access both CPU and GPU resident memory, enabling you to focus on algorithms instead of explicit memory management.

Unified memory model

The GH200 architecture integrates the NVIDIA Grace CPU and Hopper GPU using NVLink chip-to-chip (NVLink-C2C), enabling a unified memory model. The unified virtual memory (UVM) model allows CPU and GPU threads to access both CPU-resident and GPU-resident memory transparently through a single shared address space, and transfers between the two can run up to 7x faster than in conventional systems connected over PCIe Gen 5. For developers, UVM simplifies scenarios such as memory oversubscription, on-demand paging, and data consistency. For example, as context windows, embedding tables, or datasets outgrow GPU memory, the software stack can now manage the data movement seamlessly.

High-bandwidth access

The NVLink-C2C interconnect in the NVIDIA GH200 NVL2 offers up to 900 GB/s of bandwidth, about 7x that of the PCIe Gen 5 x16 links used in traditional server architectures. This bandwidth lets the GPU use CPU memory directly, reducing the overhead associated with memory copying.
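The 7x figure is the ratio of the two peak link bandwidths listed in Table 1 (900 GB/s for CPU-GPU NVLink-C2C versus 128 GB/s for PCIe Gen 5 x16). The following minimal sketch turns that ratio into idealized copy times for a hypothetical 288 GB payload. The helper names are illustrative, and real transfers run below peak bandwidth, so treat the results as lower bounds on transfer time rather than measurements.

```python
# Idealized link comparison using the peak bandwidths from Table 1.
# Ignores latency, protocol overhead, and achieved-vs-peak efficiency.

NVLINK_C2C_GBPS = 900.0     # CPU-GPU NVLink-C2C, GB/s (peak)
PCIE_GEN5_X16_GBPS = 128.0  # PCIe Gen 5 x16, GB/s (peak)


def bandwidth_ratio(fast_gbps: float, slow_gbps: float) -> float:
    """Return how many times more bandwidth the first link has than the second."""
    return fast_gbps / slow_gbps


def ideal_copy_seconds(size_gb: float, link_gbps: float) -> float:
    """Lower-bound time to move size_gb at the link's peak bandwidth."""
    return size_gb / link_gbps


ratio = bandwidth_ratio(NVLINK_C2C_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink-C2C vs PCIe Gen 5 x16: {ratio:.2f}x")  # 7.03x

# Hypothetical payload: enough data to fill both GPUs' HBM3e (2 x 144 GB).
payload_gb = 288.0
for name, bw in [("NVLink-C2C", NVLINK_C2C_GBPS), ("PCIe Gen 5 x16", PCIE_GEN5_X16_GBPS)]:
    print(f"{name}: {ideal_copy_seconds(payload_gb, bw):.2f} s")
```

At peak rates, the 288 GB move takes about 0.32 s over NVLink-C2C versus 2.25 s over PCIe Gen 5 x16.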

Table 1, taken from the node hardware section of the latest Enterprise RA, compares the memory specifications of the GH200 and the GH200 NVL2.

| Specification | NVIDIA GH200 Grace Hopper Superchip | NVIDIA GH200 NVL2 System |
| --- | --- | --- |
| HBM3e memory (total) | 144 GB HBM3e | 288 GB HBM3e (2 x 144 GB) |
| Fast memory per solution | Up to 624 GB | Up to 1248 GB |
| GPU memory bandwidth | Up to 4.9 TB/s | Up to 9.8 TB/s |
| CPU-GPU NVLink-C2C | 900 GB/s | 900 GB/s |
| CPU-CPU NVLink-C2C | N/A | 600 GB/s |
| GPU-GPU NVLink | N/A | 900 GB/s |
| PCIe Gen5 x16 | 128 GB/s | 128 GB/s |
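The NVL2 column of Table 1 follows from the single-superchip column. The following sketch reproduces it, assuming "fast memory" means Grace LPDDR5X plus Hopper HBM3e (480 GB + 144 GB per superchip, per the oversubscription section) and that the NVL2 bandwidth figure is the aggregate across both GPUs. The `Superchip` class and `nvl2_totals` helper are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    lpddr5x_gb: int     # Grace CPU memory, "up to" capacity
    hbm3e_gb: int       # Hopper GPU memory
    hbm_bw_tbps: float  # GPU memory bandwidth, "up to"

    @property
    def fast_memory_gb(self) -> int:
        # Assumption: fast memory = CPU LPDDR5X + GPU HBM3e
        return self.lpddr5x_gb + self.hbm3e_gb


GH200 = Superchip(lpddr5x_gb=480, hbm3e_gb=144, hbm_bw_tbps=4.9)


def nvl2_totals(chip: Superchip, count: int = 2) -> dict:
    """Aggregate capacity and bandwidth across `count` superchips in one node."""
    return {
        "hbm3e_gb": chip.hbm3e_gb * count,
        "fast_memory_gb": chip.fast_memory_gb * count,
        "hbm_bw_tbps": round(chip.hbm_bw_tbps * count, 1),
    }


print(GH200.fast_memory_gb)  # 624
print(nvl2_totals(GH200))    # {'hbm3e_gb': 288, 'fast_memory_gb': 1248, 'hbm_bw_tbps': 9.8}
```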

Memory coherency

The Address Translation Services (ATS) mechanism enables the GH200 NVL2 to support a coherent memory model. Coherent memory enables native atomic operations (indivisible read-modify-write operations on shared memory) from both the CPU and GPU. ATS in the GH200 NVL2 reduces the need for explicit memory management and copying.

Oversubscription of GPU memory

The architecture of this superchip intentionally enables the GPU in GH200 to oversubscribe its memory by using the NVIDIA Grace CPU memory at high bandwidth. Each Grace Hopper Superchip features up to 480 GB of LPDDR5X and 144 GB of HBM3e (1248 GB total in NVL2 as shown in Table 1). This means that significantly more memory is accessible compared to what is available with high-bandwidth memory (HBM) alone. GPU access to CPU memory further reduces the need for memory copying.
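To make the capacity arithmetic concrete, the following sketch splits a hypothetical working set between HBM3e and Grace LPDDR5X on one GH200 NVL2 node. It assumes the "up to" capacities above (2 x 144 GB HBM3e, 2 x 480 GB LPDDR5X) are fully usable and uses a simple "fill HBM first" policy. That policy is an illustrative assumption, not a description of how the driver or operating system actually places or migrates pages, and `place_working_set` is a hypothetical helper.

```python
HBM3E_GB = 2 * 144.0    # GPU memory across both superchips
LPDDR5X_GB = 2 * 480.0  # Grace CPU memory across both superchips


def place_working_set(size_gb: float, hbm_gb: float = HBM3E_GB,
                      lpddr5x_gb: float = LPDDR5X_GB) -> tuple:
    """Return (GB held in HBM3e, GB spilled to LPDDR5X), filling HBM3e first.

    Illustrative policy only; real placement is handled by the system software.
    """
    if size_gb < 0:
        raise ValueError("size_gb must be non-negative")
    if size_gb > hbm_gb + lpddr5x_gb:
        raise ValueError("working set exceeds the node's fast memory")
    in_hbm = min(size_gb, hbm_gb)
    return in_hbm, size_gb - in_hbm


# A hypothetical 500 GB working set: too large for HBM3e alone.
print(place_working_set(500.0))  # (288.0, 212.0)

# How much more memory the GPUs can reach compared with HBM3e alone.
print(f"{(HBM3E_GB + LPDDR5X_GB) / HBM3E_GB:.2f}x")  # 4.33x
```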

Accelerated bulk transfers

NVIDIA Hopper Direct Memory Access (DMA) copy engines round out the GH200 toolkit for memory movement. Supported by NVLink-C2C, the Hopper DMA engines accelerate bulk transfers of pageable memory (ordinary host memory that is not page-locked, so the operating system can page it out) between the host and device.

Together, these technologies reduce the need for explicit memory copying on GH200. Figure 1 compares a discrete memory system (where copies are made) with a GH200, visualizing the difference in memory copying and movement. In the diagram, colored balls represent memory copies across the discrete memory system. GH200 requires fewer balls and therefore fewer copies.

Support for PyTorch in GH200 NVL2

A notable software update, optimized for GH200 unified memory, further improves the developer experience.

AI developers working at the framework level will appreciate the recently added Unified Virtual Memory (UVM) support for PyTorch on GH200 NVL2. The framework runs exceptionally well on GH200 NVL2 because the Grace Hopper architecture gives it access to the entire memory pool. Since GH200 NVL2 is deliberately designed to permit oversubscribing GPU memory, developers don’t have to size everything (models, batches, context, and so on) to fit in GPU memory.

Prior development methods forced an “always have some gas in your tank” behavior: leave GPU memory unused or risk triggering an error or a severe oversubscription penalty. Not anymore. GH200 NVL2 is explicitly designed for GPUs to be oversubscribed, so you can use all the gas in your tank. To learn more about how GH200 offloads the key-value (KV) cache to CPU memory to save valuable GPU memory, see NVIDIA GH200 Superchip Accelerates Inference by 2x in Multiturn Interactions with Llama Models.
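A rough size estimate shows why the KV cache is a natural candidate for offloading. The sketch below uses the standard per-token KV cache formula (one key and one value vector per KV head, per layer) with an assumed model shape: 80 layers, 8 KV heads, head dimension 128, FP16 values, and a 128K-token context. These parameters are hypothetical, chosen to resemble a 70B-class model with grouped-query attention, and are not taken from the referenced post.

```python
GIB = 1024 ** 3


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for `batch` sequences of `seq_len` tokens.

    The leading factor of 2 covers one key and one value vector per KV head,
    per layer, per token. bytes_per_elem=2 assumes FP16/BF16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * seq_len * batch


# Hypothetical model shape and context length (assumptions, not measured values).
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128
CONTEXT = 128 * 1024  # tokens

one_seq = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)
eight_seqs = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT, batch=8)
print(one_seq / GIB)     # 40.0
print(eight_seqs / GIB)  # 320.0
```

Under these assumptions, eight concurrent long-context sequences need about 320 GiB (roughly 344 GB) of KV cache alone, more than the 288 GB of HBM3e in a GH200 NVL2 node before model weights are even counted, but well within its 1248 GB of fast memory.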

Recommended configuration for GH200 NVL2

The new NVIDIA Enterprise RA for NVIDIA GH200 NVL2 and NVIDIA Spectrum-X includes a nomenclature update. The optimal server configuration for NVIDIA GH200 NVL2 and the NVIDIA Spectrum-X networking platform is 2-2-3-400. As in earlier Enterprise RAs, the first three numbers in this hyphenated designation refer to the number of sockets (CPUs), the number of GPUs, and the number of network adapters in the server. The fourth number, 400 in this case, now describes the east-west network bandwidth per GPU in gigabits per second.
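The naming scheme is regular enough to decode mechanically. The following sketch parses a designation such as 2-2-3-400 into its four fields; the `ServerConfig` class and its method names are hypothetical. The per-node total it computes is simply GPUs multiplied by per-GPU east-west bandwidth, an arithmetic consequence of the definition above rather than a figure quoted by the RA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerConfig:
    cpus: int              # sockets
    gpus: int
    network_adapters: int
    ew_gbps_per_gpu: int   # east-west bandwidth per GPU, Gb/s

    @classmethod
    def parse(cls, name: str) -> "ServerConfig":
        """Decode a 'CPUs-GPUs-adapters-bandwidth' designation."""
        parts = name.split("-")
        if len(parts) != 4:
            raise ValueError(f"expected four hyphen-separated numbers, got {name!r}")
        cpus, gpus, adapters, bw = (int(p) for p in parts)
        return cls(cpus, gpus, adapters, bw)

    def total_ew_gbps(self) -> int:
        """Aggregate east-west bandwidth implied by the designation."""
        return self.gpus * self.ew_gbps_per_gpu


cfg = ServerConfig.parse("2-2-3-400")
print(cfg)                  # ServerConfig(cpus=2, gpus=2, network_adapters=3, ew_gbps_per_gpu=400)
print(cfg.total_ew_gbps())  # 800
```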

Get started building with GH200 NVL2

With its simplified memory management, GH200 NVL2 is already enhancing performance in real-world applications. Multiturn inference, big data analytics, and large-scale data processing all directly benefit from the GH200 unified CPU+GPU architecture.

The 2-2-3-400 server configuration for GH200 NVL2 is supported by NVIDIA global partners in systems including the HPE ProLiant Compute DL384 Gen12 and the Supermicro ARS-221GL-NHIR.

The NVIDIA Enterprise RA for GH200 NVL2 is now available for partners to leverage the efficiency of unified memory to build scalable data center solutions, with proven and comprehensive design recommendations.

Learn more about NVIDIA Enterprise Reference Architectures and NVIDIA GH200 performance in the latest round of MLPerf Inference.

Join the NVIDIA Enterprise Reference Architecture team onsite at NVIDIA GTC 2025. To get your cluster design questions answered by an NVIDIA architect, add the session Everything You Want to Ask About NVIDIA Enterprise Reference Architectures [CWE74549] to your schedule.

The NVIDIA Grace Hopper team will also be hosting a series of GTC sessions, including:

- Get the Most Performance From Grace Hopper [S72687]

- Pioneering the Future of Data Platforms for the Era of Generative AI [S71650]

- Keep Your GPUs Going Brrr: Crushing Whitespace in Model Training [S73733]

- Accelerating Data Analytics Workflows on GPU Systems [CWE72882]

- Fortran Standard Parallelism on Grace Hopper With Unified Memory [P72780]

- Turbocharge Numerical Computing on GPU and CPU in C++ [CWE72491]

- Maximize AI Potential on Arm Neoverse Cores With NVIDIA’s Grace Hopper, Grace Blackwell, and Grace CPU Superchips [S74288] (Presented by Arm)

- Application Optimization for NVIDIA Grace CPU [S72978]

- What’s in Your Developer Toolbox? CUDA and Graphics Profiling, Optimization, and Debugging Tools [CWE72393]